OpenAI, the organization behind groundbreaking AI models like GPT and DALL·E, requires cutting-edge hardware to handle the massive computations involved in training and running these sophisticated models. But what exactly powers OpenAI’s AI systems? In this article, we’ll explore the hardware behind OpenAI, the chips it uses, and whether the company might shift from NVIDIA to AMD chips in the future.

What Hardware Runs OpenAI?

To support its ambitious AI models, OpenAI relies on some of the most advanced data center hardware available. The sheer scale of the computations involved in training GPT and its successors demands highly specialized infrastructure. Key components of the hardware that runs OpenAI include graphics processing units (GPUs), high-performance computing systems, and custom-built chips.

At the heart of OpenAI’s infrastructure are powerful GPU clusters designed for parallel processing, which is essential for training deep learning models. These GPUs are housed in massive server farms and are reached through cloud platforms and specialized AI supercomputers. The scale of these systems is enormous, with thousands of GPUs running in parallel to handle the high-volume workloads of AI model training and inference.

What Chip Does OpenAI Use?

OpenAI primarily uses NVIDIA chips for its AI models. NVIDIA has long been the leader in the field of AI hardware, thanks to its GPUs designed for parallel processing tasks like machine learning and deep learning. Specifically, OpenAI uses NVIDIA A100 GPUs for training and inference tasks.

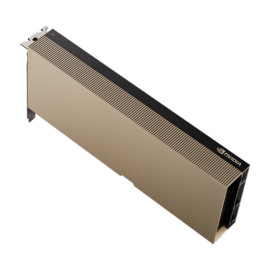

NVIDIA A100 GPU

The A100 Tensor Core GPU is one of the most powerful chips in NVIDIA’s lineup, optimized for deep learning workloads. It offers exceptional performance for both training large neural networks and performing real-time inference tasks. The A100 is equipped with 40GB or 80GB of high-bandwidth memory, providing the memory capacity and bandwidth required for large-scale AI models like GPT.
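To see why memory capacity matters, a back-of-the-envelope estimate helps. The sketch below counts how many GPUs are needed just to hold a model's weights. It assumes 2 bytes per parameter (16-bit precision), treats 1 GB as 10^9 bytes for simplicity, and uses the publicly reported figure of 175 billion parameters for GPT-3 as an illustrative input. The function name `min_gpus_for_weights` is hypothetical, and the estimate ignores activations, gradients, and optimizer state, which add considerably more during training.

```python
GB = 10**9  # assumption: decimal gigabytes, to keep the arithmetic simple


def min_gpus_for_weights(num_params: int, bytes_per_param: int, gpu_memory_gb: int) -> int:
    """Smallest number of GPUs whose combined memory can hold the weights alone."""
    weight_bytes = num_params * bytes_per_param
    gpu_bytes = gpu_memory_gb * GB
    # Integer ceiling division avoids floating-point rounding surprises.
    return -(-weight_bytes // gpu_bytes)


params = 175 * 10**9  # illustrative input: reported GPT-3 parameter count
for memory_gb in (40, 80):  # the two A100 memory configurations
    gpus = min_gpus_for_weights(params, bytes_per_param=2, gpu_memory_gb=memory_gb)
    print(f"{memory_gb} GB cards: at least {gpus} GPUs for the weights alone")
```

Even under these generous assumptions, a model of that size does not fit on a single card, which is why the weights have to be spread across many GPUs.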

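The A100’s high throughput and massive memory bandwidth make it well suited to distributed AI training, where a single model is trained across many GPUs at once to reduce training time. Its Multi-Instance GPU (MIG) feature works in the other direction: it can partition one GPU into as many as seven isolated instances, so several smaller workloads, such as inference jobs, can share a single card.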

NVIDIA DGX Systems

In addition to individual GPUs, OpenAI likely uses NVIDIA DGX systems, which are pre-configured AI supercomputers designed to run deep learning applications at scale. A DGX A100 system, for example, combines eight A100 GPUs in one machine, providing the computational resources needed to handle the intensive training requirements of models like GPT.

What Tools Does OpenAI Use?

In addition to advanced hardware, OpenAI also utilizes a range of software tools and AI frameworks to train and deploy its models. These tools are designed to optimize the performance of AI models and make it easier for researchers and engineers to work with massive datasets and complex algorithms.

Some of the tools and technologies OpenAI uses include:

- TensorFlow and PyTorch: These are two of the most popular deep learning frameworks for training AI models. PyTorch, in particular, is favored by many researchers for its flexibility and ease of use. OpenAI announced in 2020 that it was standardizing on PyTorch, although earlier releases such as the GPT-2 code were written in TensorFlow.

- CUDA: CUDA is NVIDIA’s parallel computing platform and programming model. It is widely used by OpenAI to run machine learning models on NVIDIA GPUs efficiently. CUDA allows OpenAI to leverage the full power of GPUs for training, significantly speeding up the process.

- Distributed Training: OpenAI also employs distributed computing techniques to train models across multiple machines simultaneously. This enables it to scale its training operations and handle the massive datasets involved in training advanced AI models.

- OpenAI Gym: OpenAI developed Gym, a toolkit for developing and comparing reinforcement learning algorithms. This open-source platform allows researchers to train AI models in a variety of simulated environments, which is crucial for training agents in areas like robotics and gaming. The project is now maintained by the Farama Foundation under the name Gymnasium.

- OpenAI Codex and GPT APIs: OpenAI Codex, the AI that powers GitHub Copilot, and the GPT API are some of the tools OpenAI provides to developers. These tools enable third-party applications to interact with OpenAI’s models, making the power of GPT-3 and Codex available for a wide range of use cases.
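The distributed training idea in the list above can be illustrated with a toy data-parallel step in plain Python. This is only a sketch: the workers are simulated in a single process, the model is a one-parameter linear fit, and helper names such as `local_gradient` and `all_reduce_mean` are hypothetical. Real systems typically run this across many GPUs using a framework's distributed library, and nothing here reflects OpenAI's actual training code.

```python
def local_gradient(w, shard):
    """Gradient of mean squared error for y ≈ w * x over one worker's data shard."""
    return sum(2 * (w * x - y) * x for x, y in shard) / len(shard)


def all_reduce_mean(values):
    """Stand-in for an all-reduce: every worker ends up with the same average."""
    return sum(values) / len(values)


def data_parallel_step(w, shards, lr):
    # Each worker computes a gradient on its own shard (concurrently in a real system).
    grads = [local_gradient(w, shard) for shard in shards]
    # The averaged gradient is applied identically on every worker's model copy.
    return w - lr * all_reduce_mean(grads)


# Toy dataset following y = 3x, split evenly across 4 simulated workers.
data = [(float(x), 3.0 * x) for x in range(1, 9)]
shards = [data[i::4] for i in range(4)]

w = 0.0
for _ in range(200):
    w = data_parallel_step(w, shards, lr=0.01)

print(f"learned weight: {w:.4f}")  # should approach the true slope of 3
```

Because the shards are the same size, averaging the per-worker gradients gives the same update as computing the gradient over the full batch on one machine. That equivalence is what lets data parallelism add workers without changing the result of each step.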

Will OpenAI Start Using AMD Chips?

As of now, OpenAI primarily uses NVIDIA chips, and there has been no official announcement from the company about adopting AMD chips in the near future. NVIDIA has long been the preferred choice for AI workloads due to its dominance in the GPU market and its specialized hardware, such as the A100 and H100 GPUs, designed specifically for deep learning.

However, AMD has been making strides in the AI hardware market with its Instinct MI series accelerators (formerly branded Radeon Instinct) and its EPYC server CPUs. AMD’s hardware is gaining attention for its performance and cost-effectiveness in certain types of workloads, especially in parallel computing and high-performance computing (HPC). Given the increasing competition in the AI hardware space, it’s possible that OpenAI may explore AMD’s chips in the future for specific use cases, but at the moment, NVIDIA remains the dominant supplier.

Does OpenAI Use NVIDIA Chips?

Yes, OpenAI uses NVIDIA chips, specifically the A100 GPUs, which are a cornerstone of its AI training infrastructure. These chips are integral to OpenAI’s ability to train large-scale models like GPT. The high processing power and large memory capacity of NVIDIA GPUs make them an ideal fit for the computational demands of AI research and development at OpenAI.

In addition to the A100 GPUs, OpenAI’s infrastructure likely also includes NVIDIA DGX systems, which are AI supercomputers designed to scale AI workloads efficiently. These systems, combined with NVIDIA’s CUDA programming model, enable OpenAI to accelerate the training and inference of its AI models.

Conclusion: The Hardware Powering OpenAI’s AI Revolution

OpenAI’s cutting-edge AI models, such as GPT and DALL·E, are powered by some of the most advanced hardware available. The NVIDIA A100 GPUs and DGX systems form the backbone of OpenAI’s infrastructure, providing the massive computational power needed for training and running sophisticated AI models.

While NVIDIA currently dominates OpenAI’s hardware setup, AMD and other competitors are gaining ground in the AI hardware space, with the potential to influence future developments. However, for now, NVIDIA’s specialized GPUs and software tools like CUDA remain the primary choice for OpenAI.

As AI technology continues to advance, the evolution of AI hardware will play a pivotal role in enabling organizations like OpenAI to push the boundaries of what artificial intelligence can achieve. Whether NVIDIA, AMD, or another player emerges as the leading supplier of AI hardware, the future of AI will undoubtedly be shaped by the hardware innovations that support it.