As artificial intelligence (AI) continues to evolve, the need for powerful servers to support AI workloads has never been greater. AI technologies such as machine learning (ML), deep learning (DL), and data analytics require significant computational resources, and this is where AI servers come into play. Whether you’re deploying AI training servers to build complex models or AI inference servers for real-time decision-making, selecting the right infrastructure is essential.

In this article, we will explore the main types of AI servers and how leading companies like NVIDIA and Google are shaping the AI server landscape. We will also cover AI server pricing, including AI GPU servers and edge AI servers, to help you make informed decisions about your AI infrastructure needs.

What is an AI Server?

An AI server is a high-performance computing (HPC) system designed to run AI workloads, including training, inference, and data processing. These servers are typically equipped with powerful GPUs (Graphics Processing Units), which are crucial for accelerating AI tasks that involve massive amounts of parallel processing, such as training deep learning models or running complex simulations.

AI servers differ from traditional servers in that they are optimized for the specific demands of AI applications, offering specialized hardware configurations that maximize processing speed and efficiency. AI servers can be used for tasks like:

- AI Training: Training machine learning models, including deep learning models, requires massive computational power, especially for large datasets. AI servers are equipped with GPUs or TPUs (Tensor Processing Units) to speed up training.

- AI Inference: Once an AI model is trained, it must be deployed to make real-time predictions or decisions based on new input data. Inference servers, typically optimized for low-latency and high-throughput tasks, are responsible for delivering these predictions.

Key Types of AI Servers

- AI Training Servers

AI training servers are designed for training machine learning models on massive datasets. These servers often feature multiple high-performance GPUs, large memory capacities, and fast storage systems to handle the heavy computational requirements. Training a deep learning model can take hours, days, or even weeks, depending on the complexity of the model and the size of the dataset, so the right hardware is crucial for minimizing time-to-market.
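To see why training time ranges from hours to weeks, you can use a common back-of-the-envelope rule for dense transformer models. It estimates total training compute as roughly 6 × N × D floating-point operations, where N is the parameter count and D is the number of training tokens. The minimal Python sketch below converts that estimate into wall-clock days. The peak throughput per GPU and the utilization fraction are assumptions you supply, not benchmarks for any specific product, and the rule ignores communication overhead and job restarts.

```python
def training_days(params: float, tokens: float, num_gpus: int,
                  peak_flops_per_gpu: float, utilization: float) -> float:
    """Estimate wall-clock training time in days.

    Uses the ~6 * params * tokens FLOPs approximation for dense transformer
    training. `utilization` is the assumed fraction of peak throughput that
    is actually achieved; communication and restart overheads are ignored.
    """
    if num_gpus <= 0 or not 0 < utilization <= 1:
        raise ValueError("num_gpus must be > 0 and utilization in (0, 1]")
    total_flops = 6 * params * tokens
    achieved_flops_per_second = num_gpus * peak_flops_per_gpu * utilization
    return total_flops / achieved_flops_per_second / 86_400  # seconds per day


if __name__ == "__main__":
    # Hypothetical inputs: 1B parameters, 20B tokens, 8 GPUs,
    # an assumed 300 TFLOP/s peak per GPU, and 40% utilization.
    days = training_days(1e9, 20e9, 8, 300e12, 0.4)
    print(f"Estimated training time: {days:.1f} days")
```

With these hypothetical inputs, the estimate comes to under two days. The estimate grows linearly with model size and dataset size, so a model ten times larger takes ten times as long on the same hardware. This is why larger projects need many more GPUs.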

For training AI models, you need a server with the following components:

- Multiple GPUs for parallel processing

- GPUs with high-bandwidth memory (HBM), which moves data to the GPU cores fast enough to keep them busy

- Powerful CPUs (Intel Xeon or AMD EPYC) to handle data loading, preprocessing, and other tasks running alongside the GPUs

- Large, fast storage, such as NVMe SSDs, for handling massive datasets
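A common way to decide how much GPU memory a training server needs is a widely used rule of thumb for mixed-precision training with the Adam optimizer. It counts about 16 bytes per parameter for weights, gradients, and optimizer state. The minimal sketch below applies that rule. It assumes model state is sharded evenly across GPUs, as fully sharded data-parallel approaches do, and it deliberately leaves out activations and framework overhead. The real requirement is therefore higher than this lower bound. The function names and example figures are illustrative.

```python
import math

# Rule-of-thumb bytes per parameter for mixed-precision training with Adam:
# 2 (fp16/bf16 weights) + 2 (fp16/bf16 gradients)
# + 12 (fp32 master weights, momentum, and variance).
# Activations, buffers, and framework overhead are NOT included.
BYTES_PER_PARAM_MIXED_ADAM = 16


def model_state_gb(params: float,
                   bytes_per_param: int = BYTES_PER_PARAM_MIXED_ADAM) -> float:
    """Memory for weights, gradients, and optimizer state, in GB (1e9 bytes)."""
    return params * bytes_per_param / 1e9


def min_gpus_for_model_state(params: float, gpu_memory_gb: float,
                             bytes_per_param: int = BYTES_PER_PARAM_MIXED_ADAM) -> int:
    """Lower bound on GPU count, assuming model state is sharded evenly across GPUs."""
    return math.ceil(model_state_gb(params, bytes_per_param) / gpu_memory_gb)


if __name__ == "__main__":
    params = 7e9          # hypothetical 7B-parameter model
    gpu_memory_gb = 80    # hypothetical per-GPU memory
    print(f"Model state: {model_state_gb(params):.0f} GB")
    print(f"Minimum GPUs (before activations): "
          f"{min_gpus_for_model_state(params, gpu_memory_gb)}")
```

For a hypothetical 7-billion-parameter model, model state alone is about 112 GB. Even with 80 GB per GPU, at least two GPUs are needed before activations are counted.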

Leading solutions for AI training servers include NVIDIA DGX Systems, Supermicro AI Servers, and Dell PowerEdge AI Servers, all of which offer configurations optimized for large-scale AI training.

- AI Inference Servers

Once AI models are trained, they need to be deployed for real-time decision-making, which is where AI inference servers come in. These servers are optimized to deliver low-latency and high-throughput processing, making them ideal for tasks like natural language processing (NLP), image recognition, and autonomous driving.

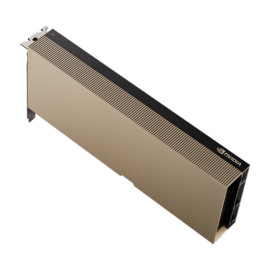

Servers built on NVIDIA GPUs, such as the NVIDIA A100 Tensor Core GPU, are a popular choice for inference tasks. On the software side, NVIDIA Triton Inference Server is open-source inference serving software. It runs models from multiple frameworks, such as TensorFlow, PyTorch, and ONNX, behind a single optimized serving layer.
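To show what a client request to Triton looks like: Triton's HTTP endpoint implements the KServe v2 inference protocol, in which the client POSTs JSON to `/v2/models/<model_name>/infer`. The minimal sketch below uses only the Python standard library. The model name `my_model`, the tensor names `INPUT0` and `OUTPUT0`, and the tensor shape are hypothetical, and they must match the model configuration deployed on your server. Port 8000 is Triton's default HTTP port.

```python
import json
import math
import urllib.request


def build_infer_request(input_name, values, shape, datatype="FP32", output_names=()):
    """Build a KServe v2 inference request body.

    `values` is the tensor flattened in row-major order; its length must
    equal the product of `shape`.
    """
    if math.prod(shape) != len(values):
        raise ValueError("len(values) must equal the product of shape")
    body = {
        "inputs": [{
            "name": input_name,
            "shape": list(shape),
            "datatype": datatype,
            "data": list(values),
        }]
    }
    if output_names:
        # Optional: ask the server to return only these outputs.
        body["outputs"] = [{"name": name} for name in output_names]
    return body


def send_infer_request(body, model_name, base_url="http://localhost:8000"):
    """POST the body to /v2/models/<model_name>/infer and return the parsed JSON reply."""
    request = urllib.request.Request(
        f"{base_url}/v2/models/{model_name}/infer",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())


if __name__ == "__main__":
    # Hypothetical tensor names and shape; they must match your model's config.
    body = build_infer_request("INPUT0", [0.1, 0.2, 0.3, 0.4], [1, 4],
                               output_names=["OUTPUT0"])
    print(json.dumps(body))
```

Against a running server, `send_infer_request(body, "my_model")` returns the parsed response, whose `outputs` list holds the result tensors. The request body is built separately so it can be inspected or tested without a live server.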

Inference servers typically have the following key features:

- Fast processors for low-latency response

- Efficient GPUs that maximize throughput without needing the massive computational power of training servers

- Scalable design that allows businesses to deploy inference servers across multiple locations or edge devices
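Sizing a scalable inference deployment usually starts from two numbers. The first is the request rate you must serve. The second is the throughput one server or GPU instance sustains at your latency target, which you would measure with a load test. The minimal sketch below assumes a fixed headroom fraction reserved for traffic spikes. It also applies Little's law (average requests in flight = arrival rate × average latency) to estimate concurrency. The function name and all example figures are hypothetical.

```python
import math


def size_inference_fleet(target_rps: float, per_instance_rps: float,
                         mean_latency_s: float, headroom: float = 0.3):
    """Return (instances_needed, average_requests_in_flight).

    per_instance_rps: measured throughput of one instance at your latency
    target. headroom: extra capacity fraction for spikes (an assumption).
    """
    if per_instance_rps <= 0:
        raise ValueError("per_instance_rps must be positive")
    instances = math.ceil(target_rps * (1 + headroom) / per_instance_rps)
    # Little's law: average concurrency = arrival rate * average time in system.
    in_flight = target_rps * mean_latency_s
    return instances, in_flight


if __name__ == "__main__":
    # Hypothetical figures: 500 req/s target, 120 req/s per instance, 50 ms latency.
    instances, in_flight = size_inference_fleet(500, 120, 0.050)
    print(f"Instances: {instances}, requests in flight: {in_flight:.0f}")
```

The in-flight estimate indicates how much concurrency, such as batch slots or worker threads, each location must support. The instance count indicates how many servers to deploy there.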

- Edge AI Servers

With the growing popularity of edge computing, edge AI servers are becoming a critical part of the AI ecosystem. These servers enable AI processing to occur closer to the data source (e.g., IoT devices or sensors), reducing latency and bandwidth requirements. Edge AI is essential for applications like autonomous vehicles, smart cities, and industrial automation.

Edge AI servers are typically smaller, more power-efficient systems that run AI inference on-site. Platforms such as NVIDIA Jetson modules and Google Coral devices (built around Google's Edge TPU) are popular choices for edge AI, delivering efficient inference at low power.
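The bandwidth benefit of edge processing comes down to simple arithmetic. Without edge processing, a device streams raw data to a central server. With it, the device sends only its inference results. The minimal sketch below compares daily upload volume for a single device. The 4 Mbit/s video stream, the 10 events per minute, and the 1 KB event size are hypothetical figures chosen for illustration, not measurements.

```python
SECONDS_PER_DAY = 86_400


def stream_bytes_per_day(bitrate_bps: float) -> float:
    """Bytes uploaded per day when a device streams continuously at bitrate_bps."""
    return bitrate_bps / 8 * SECONDS_PER_DAY


def events_bytes_per_day(events_per_day: int, bytes_per_event: int) -> int:
    """Bytes uploaded per day when only inference results (events) are sent."""
    return events_per_day * bytes_per_event


if __name__ == "__main__":
    # Hypothetical camera: 4 Mbit/s video stream versus edge inference that
    # sends 10 detection events per minute, about 1 KB each.
    cloud = stream_bytes_per_day(4e6)
    edge = events_bytes_per_day(10 * 60 * 24, 1_000)
    print(f"Cloud inference upload: {cloud / 1e9:.1f} GB/day")
    print(f"Edge inference upload:  {edge / 1e6:.1f} MB/day")
    print(f"Reduction factor: {cloud / edge:.0f}x")
```

Under these assumptions, each camera uploads tens of gigabytes per day when it streams video, compared with a few megabytes when it sends only events. The same reasoning explains the latency benefit, because the round trip to a remote server disappears.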

AI Server Price: What Affects the Cost?

The cost of AI servers varies significantly depending on several factors, including the server’s hardware components, scalability, and performance requirements. While it’s difficult to pinpoint a single price for an AI server, we can break down the factors that contribute to AI server pricing:

- GPU/TPU Selection: The choice of accelerator plays a major role in determining the price of an AI server. NVIDIA data-center GPUs such as the A100, V100, and H100 are among the most powerful and expensive options. Google's TPUs are an alternative for training AI models, but they are offered through Google Cloud rather than sold as on-premises servers.

- Number of GPUs: Cost rises with the number of GPUs. Entry-level systems with one or two GPUs may start in the range of $5,000 to $10,000. Servers with eight data-center GPUs can cost anywhere from tens of thousands to hundreds of thousands of dollars, depending on the GPU model.

- Processor and Memory Configuration: The choice of CPU (Intel or AMD) and the amount of system RAM are also key cost factors. High-end CPUs paired with large memory capacities raise the price.

- Storage and Networking: AI servers need high-bandwidth storage, such as NVMe SSDs, for fast data retrieval, which adds to the cost. High-speed networking such as InfiniBand adds further cost. It is what allows large datasets, and the gradients exchanged during multi-server training, to move between machines efficiently.

- Form Factor and Scalability: Rackmount servers typically scale better and can host more GPUs. They usually cost more than tower servers, which can be more cost-effective for smaller deployments.
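The cost factors listed above combine roughly additively, with the GPU price multiplied by the number of GPUs. The minimal sketch below is a hypothetical cost model, not a vendor pricing tool. You supply the component prices, and the example figures are placeholders rather than real quotes. The model also reports the GPU share of the total, which is often the dominant term in multi-GPU systems.

```python
from dataclasses import dataclass


@dataclass
class ServerQuote:
    """Hypothetical component prices (USD) that you fill in from real quotes."""
    gpu_unit_price: float
    num_gpus: int
    cpu_price: float       # Intel Xeon or AMD EPYC, all sockets
    memory_price: float    # system RAM
    storage_price: float   # NVMe SSDs
    network_price: float   # e.g., InfiniBand or high-speed Ethernet adapters
    chassis_price: float   # rackmount or tower chassis, power, cooling


def cost_breakdown(q: ServerQuote) -> dict:
    """Return per-component costs, the total, and the GPU share of the total."""
    parts = {
        "gpus": q.gpu_unit_price * q.num_gpus,
        "cpu": q.cpu_price,
        "memory": q.memory_price,
        "storage": q.storage_price,
        "network": q.network_price,
        "chassis": q.chassis_price,
    }
    total = sum(parts.values())
    return {**parts, "total": total, "gpu_share": parts["gpus"] / total}


if __name__ == "__main__":
    # Placeholder numbers only, not real prices.
    quote = ServerQuote(gpu_unit_price=3_000, num_gpus=4, cpu_price=3_000,
                        memory_price=1_500, storage_price=1_000,
                        network_price=500, chassis_price=2_000)
    result = cost_breakdown(quote)
    print(f"Total: ${result['total']:,.0f} (GPUs: {result['gpu_share']:.0%})")
```

Changing only `num_gpus` or `gpu_unit_price` shows how quickly the GPU term overtakes every other component.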

How Much Does an AI Server Cost?

While AI server costs vary based on the configuration, here’s a general price breakdown:

- Entry-Level AI Servers: Roughly $5,000 to $10,000 for basic models with a single NVIDIA GPU (e.g., an NVIDIA RTX 3090). A single data-center GPU such as the A100 typically pushes the price above this range.

- Mid-Range AI Servers: For servers with 2-4 GPUs, the price can range from $10,000 to $25,000, depending on the GPU and storage configuration.

- High-End AI Servers: Large-scale systems with 8 or more GPUs cost from roughly $50,000 to well over $100,000. Flagship systems such as NVIDIA DGX can reach several hundred thousand dollars.

Cloud-based solutions, such as Google AI servers in Google Cloud, offer a more flexible pricing model where customers can pay for AI compute resources on-demand, often priced per hour or based on GPU usage.
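A simple way to compare buying with renting is a break-even calculation. Break-even hours = upfront server cost / (cloud hourly rate − on-premises hourly running cost). The minimal sketch below implements that formula and converts the result into months at a given utilization. All prices in it are hypothetical placeholders. It also ignores depreciation, financing, staff time, and any cloud discounts, so treat the result as a first approximation.

```python
import math

HOURS_PER_MONTH = 730  # 8,760 hours per year / 12


def break_even_hours(upfront_cost: float, cloud_rate_per_hour: float,
                     onprem_cost_per_hour: float = 0.0) -> float:
    """Hours of use after which buying becomes cheaper than renting.

    onprem_cost_per_hour covers power, cooling, and operations for the owned
    server (an assumption you estimate). Returns math.inf if renting is never
    more expensive per hour.
    """
    hourly_savings = cloud_rate_per_hour - onprem_cost_per_hour
    if hourly_savings <= 0:
        return math.inf
    return upfront_cost / hourly_savings


def break_even_months(hours: float, utilization: float) -> float:
    """Convert break-even hours into calendar months at a utilization in (0, 1]."""
    return hours / (HOURS_PER_MONTH * utilization)


if __name__ == "__main__":
    # Hypothetical: $40,000 server, $4.00/h comparable cloud instance,
    # $1.00/h on-premises running cost.
    hours = break_even_hours(40_000, 4.00, 1.00)
    print(f"Break-even after ~{hours:,.0f} hours")
    print(f"At 50% utilization: ~{break_even_months(hours, 0.5):.0f} months")
```

The key input is utilization. A server that sits idle most of the time takes proportionally longer to pay for itself, which is why on-demand cloud pricing suits intermittent workloads.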

Google AI Server: Cloud Solutions for AI Workloads

Google AI servers are available through Google Cloud, providing access to powerful GPU and TPU instances without the need for on-premises hardware. Google offers NVIDIA A100, V100, and T4 GPUs for AI training and inference, as well as Google TPUs, which are specifically designed for machine learning workloads.

Google’s AI server offerings provide scalability, flexibility, and access to cutting-edge infrastructure, making it easier for organizations to run AI workloads in the cloud. Pricing depends on the instance type, the number of GPUs or TPUs, and the duration of usage. This can make Google Cloud a cost-effective option for businesses that need scalable AI infrastructure without the upfront cost of building their own servers.

Conclusion: Choosing the Right AI Server

When selecting an AI server, it’s important to consider your specific workload requirements, including whether you need a training server, an inference server, or an edge AI server. The right server for your AI projects will depend on factors such as the size of your AI models, the volume of data you need to process, and your budget.

While the initial investment in AI servers can be high, especially for multi-GPU systems, the performance and capabilities they unlock are essential for organizations looking to leverage AI at scale. Cloud solutions like Google AI servers are also making AI infrastructure more accessible and affordable, allowing even smaller businesses to tap into the full potential of AI technology without buying hardware up front.

Ultimately, whether you’re building your own infrastructure or opting for cloud-based solutions, AI servers are the backbone of AI innovation, enabling faster development, deployment, and scaling of AI-powered applications.