NVIDIA A30 24GB HBM2 Memory Ampere GPU Tesla Data Center Accelerator

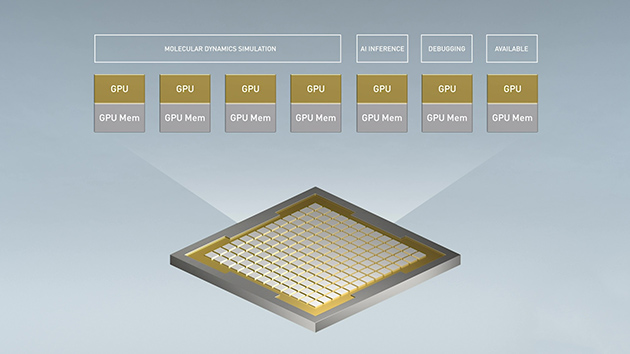

Enterprise-Ready Utilization

| Specification | A30 |
| --- | --- |
| FP64 | 5.2 teraFLOPS |
| FP64 Tensor Core | 10.3 teraFLOPS |
| FP32 | 10.3 teraFLOPS |
| TF32 Tensor Core | 82 teraFLOPS / 165 teraFLOPS* |
| BFLOAT16 Tensor Core | 165 teraFLOPS / 330 teraFLOPS* |
| FP16 Tensor Core | 165 teraFLOPS / 330 teraFLOPS* |
| INT8 Tensor Core | 330 TOPS / 661 TOPS* |
| INT4 Tensor Core | 661 TOPS / 1321 TOPS* |
| Media engines | 1 optical flow accelerator (OFA); 1 JPEG decoder (NVJPEG); 4 video decoders (NVDEC) |
| GPU memory | 24GB HBM2 |
| GPU memory bandwidth | 933GB/s |
| Interconnect | PCIe Gen4: 64GB/s; Third-gen NVLINK: 200GB/s** |
| Form factors | Dual-slot, full-height, full-length (FHFL) |
| Max thermal design power (TDP) | 165W |
| Multi-Instance GPU (MIG) | 4 GPU instances @ 6GB each; 2 GPU instances @ 12GB each; 1 GPU instance @ 24GB |
| Virtual GPU (vGPU) software support | NVIDIA AI Enterprise for VMware; NVIDIA Virtual Compute Server |
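
As a quick sanity check on the arithmetic in the table, the sketch below confirms that each listed MIG layout fits within the 24GB of GPU memory and that each starred Tensor Core figure is roughly double its unstarred counterpart. The constant and function names (`GPU_MEMORY_GB`, `MIG_LAYOUTS`, `mig_layout_fits`, `starred_ratio`) are illustrative assumptions, not part of any NVIDIA tool or API; the numbers are copied from the table.

```python
# Figures copied from the specification table; all names here are illustrative.
GPU_MEMORY_GB = 24

# (number of instances, memory per instance in GB) for each listed MIG layout
MIG_LAYOUTS = [(4, 6), (2, 12), (1, 24)]

# (unstarred, starred) throughput pairs; units are teraFLOPS or TOPS as listed
THROUGHPUT = {
    "TF32 Tensor Core": (82, 165),
    "BFLOAT16 Tensor Core": (165, 330),
    "FP16 Tensor Core": (165, 330),
    "INT8 Tensor Core": (330, 661),
    "INT4 Tensor Core": (661, 1321),
}


def mig_layout_fits(instances, gb_each, total_gb=GPU_MEMORY_GB):
    """Return True if the layout's combined memory does not exceed the GPU total."""
    return instances * gb_each <= total_gb


def starred_ratio(base, starred):
    """Return the ratio of the starred figure to the unstarred figure."""
    return starred / base


if __name__ == "__main__":
    for instances, gb_each in MIG_LAYOUTS:
        fits = mig_layout_fits(instances, gb_each)
        print(f"{instances} x {gb_each}GB = {instances * gb_each}GB, fits: {fits}")
    for name, (base, starred) in THROUGHPUT.items():
        print(f"{name}: starred/unstarred = {starred_ratio(base, starred):.3f}")
```

Every listed layout uses exactly the full 24GB, and the ratios all sit within about 1% of 2.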