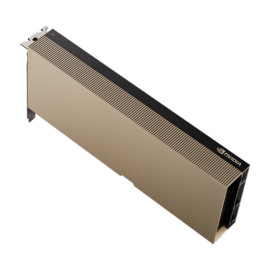

The NVIDIA H100 Tensor Core GPU is the flagship product of NVIDIA’s Hopper architecture, designed to meet the ever-growing demands of artificial intelligence (AI), high-performance computing (HPC), and deep learning workloads. As an evolutionary step forward from its predecessors, the NVIDIA H100 sets new standards in performance, scalability, and efficiency for AI and machine learning tasks. In this article, we will explore the architecture, features, and benefits of the H100 GPU, and why it is a game-changer for data centers and research institutions.

Overview of NVIDIA H100

The NVIDIA H100 is built on NVIDIA’s Hopper architecture, which was unveiled in 2022 as the successor to the Ampere architecture. While the A100 Tensor Core GPU set high standards in AI processing, the H100 takes performance further, enabling more complex models and faster computation. It offers increased efficiency and versatility, particularly for large-scale AI workloads, simulations, and data analytics.

Key Features of the NVIDIA H100

1. Fourth-Generation Tensor Cores

Tensor Cores are specialized execution units inside NVIDIA GPUs, optimized for the matrix multiply-accumulate operations that dominate deep learning. The H100 introduces fourth-generation Tensor Cores, providing significant improvements in floating-point (FP) performance and mixed-precision computing. This is critical for AI model training, where vast amounts of data need to be processed rapidly and efficiently. These cores add support for FP8 precision, which can shorten training times while largely preserving accuracy when values are scaled appropriately.
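To see why mixed precision pairs low-precision inputs with a wider accumulator, the following minimal Python sketch emulates FP16 rounding with the standard library. It is an illustration under stated assumptions, not a model of how Tensor Cores are implemented: the helper names are hypothetical, and a Python float (FP64) stands in for the wider accumulator.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("e", struct.pack("e", x))[0]


def dot_fp16_accumulator(a, b):
    """Dot product where the inputs AND the running sum are rounded to FP16."""
    acc = 0.0
    for x, y in zip(a, b):
        acc = to_fp16(acc + to_fp16(x) * to_fp16(y))
    return acc


def dot_wide_accumulator(a, b):
    """Dot product with FP16 inputs but a wider accumulator (mixed precision).

    Assumption: a Python float (FP64) stands in for the wider accumulator.
    """
    acc = 0.0
    for x, y in zip(a, b):
        acc += to_fp16(x) * to_fp16(y)
    return acc


ones = [1.0] * 4096
print(dot_fp16_accumulator(ones, ones))  # 2048.0: FP16 cannot represent 2049, so the sum stalls
print(dot_wide_accumulator(ones, ones))  # 4096.0
```

The low-precision inputs keep the multiplications cheap, while the wider accumulator prevents long sums from losing small contributions.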

2. Hopper Architecture

Named after computer science pioneer Grace Hopper, the Hopper architecture brings several advancements tailored for the next wave of AI workloads. The H100 is built on TSMC’s 4N process, a node customized for NVIDIA within TSMC’s 5 nm family, which allows about 80 billion transistors to be packed into the GPU for greater speed and efficiency. With innovations such as the new Transformer Engine and second-generation Multi-Instance GPU (MIG) partitioning, the Hopper architecture is a step toward more scalable and flexible AI infrastructure.

3. Transformer Engine

The Transformer Engine is a specialized component of the H100, aimed at accelerating the training and inference of transformer models, which are central to modern natural language processing (NLP) tasks. It works by dynamically choosing between FP8 and FP16 precision, layer by layer, to maximize throughput in tasks like large language model (LLM) training without giving up accuracy. NVIDIA cites speedups of up to 6x over previous-generation GPUs on transformer workloads; actual gains depend on the model and configuration.
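One reason FP8 needs this kind of management is its narrow range: small values vanish unless the tensor is scaled first. The sketch below is a simplified illustration in plain Python, not the Transformer Engine's actual logic. It assumes the E4M3 flavor of FP8 (largest finite value 448) and a single per-tensor scale factor derived from the largest magnitude in the tensor; the function names are hypothetical.

```python
import math

E4M3_MAX = 448.0  # largest finite value in the FP8 E4M3 format (assumed format)


def round_to_e4m3(x: float) -> float:
    """Round x to the nearest value representable in FP8 E4M3, saturating at the max."""
    if x == 0.0:
        return 0.0
    sign = -1.0 if x < 0 else 1.0
    mag = min(abs(x), E4M3_MAX)
    _, e = math.frexp(mag)       # mag = m * 2**e with 0.5 <= m < 1
    exp = max(e - 1, -6)         # -6 is the smallest normal exponent; below it, subnormals
    step = 2.0 ** (exp - 3)      # 3 mantissa bits give 8 steps per power of two
    return sign * min(round(mag / step) * step, E4M3_MAX)


def fp8_round_trip(values, use_scaling: bool):
    """Quantize values to E4M3 and back, optionally with a per-tensor scale factor."""
    amax = max(abs(v) for v in values)
    scale = E4M3_MAX / amax if (use_scaling and amax > 0) else 1.0
    return [round_to_e4m3(v * scale) / scale for v in values]


small_gradients = [1e-4, 2e-4, -3e-4]
print(fp8_round_trip(small_gradients, use_scaling=False))  # every value flushes to zero
print(fp8_round_trip(small_gradients, use_scaling=True))   # values survive within a few percent
```

Scaling maps the largest magnitude onto the top of the FP8 range, so the few available bits are spent where the data actually lives.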

4. Multi-Instance GPU (MIG) Support

MIG is a feature that allows a single H100 GPU to be partitioned into up to seven smaller, fully isolated GPU instances, each with its own dedicated compute, memory, and cache resources. This means that a data center can serve multiple users and applications on the same GPU without one tenant’s workload degrading or accessing another’s. This is a particularly useful feature for cloud service providers and enterprises running diverse workloads on shared infrastructure.
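The partitioning idea can be sketched as simple bookkeeping over seven compute slices. This is a toy model in Python, not NVIDIA's tooling: the class and method names are hypothetical, the allowed instance sizes are an assumption, and real MIG also enforces memory and placement rules that are ignored here.

```python
class MigPartitioner:
    """Toy bookkeeping for MIG compute slices on one GPU (hypothetical helper)."""

    TOTAL_SLICES = 7                 # up to seven instances per GPU
    ALLOWED_SIZES = (1, 2, 3, 4, 7)  # assumed instance sizes, in compute slices

    def __init__(self):
        self.instances = {}          # tenant name -> number of slices held

    def free_slices(self) -> int:
        """Slices not yet assigned to any instance."""
        return self.TOTAL_SLICES - sum(self.instances.values())

    def create(self, tenant: str, slices: int) -> None:
        """Reserve an isolated instance for a tenant, or raise if it cannot fit."""
        if slices not in self.ALLOWED_SIZES:
            raise ValueError(f"unsupported instance size: {slices}")
        if tenant in self.instances:
            raise ValueError(f"tenant already has an instance: {tenant}")
        if slices > self.free_slices():
            raise RuntimeError("not enough free slices on this GPU")
        self.instances[tenant] = slices

    def destroy(self, tenant: str) -> None:
        """Release a tenant's instance so its slices can be reused."""
        del self.instances[tenant]


gpu = MigPartitioner()
gpu.create("inference-service", 3)
gpu.create("notebook-user", 1)
gpu.create("batch-job", 2)
print(gpu.free_slices())  # 1
```

Because each instance owns its slices outright, adding or removing one tenant does not change what the others were given.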

5. NVLink and NVSwitch for Unprecedented Scalability

The H100 supports NVLink and NVSwitch, NVIDIA’s high-bandwidth interconnect technologies that let multiple GPUs work together as one larger system. This is especially crucial in large-scale AI training, where models can span hundreds or thousands of GPUs. Fourth-generation NVLink provides up to 900 GB/s of total bidirectional bandwidth per GPU, while NVSwitch connects GPUs so that each can communicate with any other at full NVLink speed, reducing the risk that large models are bottlenecked by data transfer.
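To get a feel for what interconnect bandwidth means in practice, the sketch below estimates the time for one gradient all-reduce using the standard ring all-reduce traffic formula. It is a back-of-the-envelope model, not a benchmark: it ignores latency and protocol overhead, the function name is hypothetical, and it assumes roughly half of the 900 GB/s headline figure is usable in each direction. The slower comparison link is also an assumed value.

```python
def ring_allreduce_seconds(payload_bytes: float, n_gpus: int,
                           link_bytes_per_s: float) -> float:
    """Estimate the time for a ring all-reduce of payload_bytes across n_gpus.

    In a ring all-reduce each GPU sends (and receives) 2 * (N - 1) / N times
    the payload, so the time is that traffic divided by per-direction bandwidth.
    """
    if n_gpus < 2:
        return 0.0  # nothing to exchange with a single GPU
    traffic = 2 * (n_gpus - 1) / n_gpus * payload_bytes
    return traffic / link_bytes_per_s


gradient_bytes = 7e9 * 2   # hypothetical model: 7 billion parameters at 2 bytes each
nvlink = 450e9             # assumption: half of 900 GB/s in each direction
slower_link = 64e9         # assumption: a much slower link, for comparison only

print(f"NVLink-class link: {ring_allreduce_seconds(gradient_bytes, 8, nvlink) * 1e3:.1f} ms")
print(f"Slower link:       {ring_allreduce_seconds(gradient_bytes, 8, slower_link) * 1e3:.1f} ms")
```

Since an all-reduce of this kind happens on every training step, the difference between the two links is paid thousands of times over a training run.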

6. Enhanced Security Features

With AI and HPC workloads increasingly handling sensitive data, security has become a top priority. The H100 introduces confidential computing on the GPU, a feature that protects data and code while they are being processed by running them inside a hardware-based trusted execution environment, isolated from other software on the system. This is particularly valuable for industries such as healthcare and finance, where data privacy is critical.

Performance Benchmarks

The NVIDIA H100 GPU is designed to deliver substantial improvements over the previous generation. The figures below are approximate peak theoretical throughput for the SXM variant with dense (non-sparse) math. NVIDIA’s headline datasheet numbers assume structured sparsity and are twice as high, and the PCIe variant is rated lower.

- FP8 Tensor Core Performance: Roughly 2,000 TFLOPS (about 2 PFLOPS) of FP8 tensor performance, which is critical for training large AI models.

- FP16 Tensor Core Performance: Roughly 1,000 TFLOPS (about 1 PFLOPS), providing a significant boost for mixed-precision training and inference.

- BF16/TF32 Tensor Core Performance: Roughly 1,000 TFLOPS for BF16 and roughly 500 TFLOPS for TF32, both commonly used for mixed-precision AI training.

- INT8 Tensor Core Performance: Roughly 2,000 TOPS (tera operations per second), optimized for inference and real-time AI applications.
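Peak throughput figures like these can be turned into a rough training-time estimate. The sketch below uses the common rule of thumb that training a transformer costs about 6 floating-point operations per parameter per token. Everything in it is an assumption for illustration: the function name, the model size, the token count, the round peak of 1,000 TFLOPS, and the 40% utilization. Real jobs sustain only a fraction of peak, and that fraction varies widely.

```python
def training_gpu_hours(n_params: float, n_tokens: float,
                       peak_flops: float, utilization: float) -> float:
    """Estimate GPU-hours to train a transformer.

    Uses the rule of thumb total FLOPs ~= 6 * parameters * tokens, then divides
    by the throughput actually sustained (peak * utilization).
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    total_flops = 6 * n_params * n_tokens
    seconds = total_flops / (peak_flops * utilization)
    return seconds / 3600


hours = training_gpu_hours(
    n_params=7e9,       # hypothetical 7-billion-parameter model
    n_tokens=1e12,      # hypothetical 1 trillion training tokens
    peak_flops=1e15,    # illustrative round peak of 1,000 TFLOPS
    utilization=0.4,    # assumed fraction of peak sustained in practice
)
print(f"{hours:,.0f} GPU-hours, or about {hours / 1024:.1f} hours on 1,024 GPUs")
```

The estimate scales linearly with model size and token count, so doubling either doubles the GPU-hours at a fixed utilization.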

Use Cases for NVIDIA H100

1. AI Model Training and Inference

With its exceptional performance in FP8 and FP16 precision, the H100 is tailored for training massive AI models, including transformers used in NLP, computer vision, and generative models. The GPU’s architecture is designed to minimize bottlenecks during both training and inference, allowing researchers and enterprises to experiment with larger and more complex models than ever before.

2. High-Performance Computing (HPC)

For industries like aerospace, pharmaceuticals, and weather forecasting, the H100’s double-precision (FP64) performance makes it a valuable tool for simulations and large-scale computations. Its support for multi-GPU systems through NVLink and NVSwitch helps keep inter-GPU communication from becoming the bottleneck in complex simulations.

3. Data Analytics and Machine Learning

The H100’s increased bandwidth and MIG support make it ideal for large-scale data analytics and machine learning tasks. Organizations can partition the GPU to handle multiple workloads simultaneously, ensuring efficient resource allocation and faster time-to-insight.

4. Cloud and Edge Computing

Cloud service providers can leverage the H100’s MIG capabilities to offer GPU-accelerated services to multiple tenants, while edge computing applications can benefit from the H100’s high-performance inference capabilities, especially in real-time AI tasks.

Conclusion

The NVIDIA H100 Tensor Core GPU is a major leap in GPU technology, offering a large step up in performance and flexibility for AI, HPC, and data analytics. Its Hopper architecture, combined with fourth-generation Tensor Cores, the Transformer Engine, and advanced security features, makes it a strong fit for enterprises looking to push the boundaries of AI research and large-scale computing. As AI models grow more complex and datasets grow larger, the H100 will continue to be a critical component in machine learning, deep learning, and high-performance computing.

Whether you are a researcher, a cloud service provider, or an enterprise looking to scale your AI infrastructure, the NVIDIA H100 is a powerful tool that promises to shape the future of AI and beyond.