The Volta architecture, released by NVIDIA in 2017, introduced Tensor Cores, hardware units built specifically to accelerate the matrix math behind deep learning. Each GPU architecture since then has improved on the previous generation to keep up with the growing computational demands of large-scale deep learning models and high-performance computing (HPC) tasks. In this article, we’ll go through the NVIDIA AI chips from each architecture in reverse order of release, focusing on the GPUs available for AI workloads, and then discuss NVIDIA AI chip prices.

What Are NVIDIA AI Chips?

NVIDIA AI chips are specialized hardware designed to accelerate AI, deep learning, and machine learning workloads. These chips use the power of parallel processing to efficiently train large models, process complex data, and run AI inference tasks. NVIDIA has developed a range of GPUs optimized for these purposes, and they are widely used in data centers, AI research, and enterprise environments.

NVIDIA AI chips are built on architectures like Volta, Ampere, and the newer Hopper architecture, all of which are engineered to maximize performance in AI-related tasks such as neural network training, large-scale data processing, and high-performance computing (HPC).

Release Year of GPU Architectures

- Blackwell (2025; the AI-training GPU based on this architecture has not been released yet)

- Hopper (2022)

- Ada Lovelace (2022)

- Ampere (2020)

- Turing (2018)

- Volta (2017)

NVIDIA AI Chips List: Top 13 GPUs for AI Workloads

NVIDIA offers a variety of AI-optimized GPUs, each designed for specific use cases and performance needs. Here’s a breakdown of the most popular and powerful NVIDIA AI chips used in AI and machine learning applications:

1. NVIDIA H200 GPU (Hopper Architecture)

The NVIDIA H200 GPU, built on the Hopper architecture, raises memory capacity to 141GB and memory bandwidth to 4.8TB/s, which suits it to the most complex AI models, machine learning, and high-performance computing applications. It targets fields like deep learning, autonomous systems, and scientific simulations.

Technical Specifications sourced from NVIDIA official site:

| Technical Specifications | H200 SXM | H200 NVL |

| FP64 | 34 TFLOPS | 30 TFLOPS |

| FP64 Tensor Core | 67 TFLOPS | 60 TFLOPS |

| FP32 | 67 TFLOPS | 60 TFLOPS |

| TF32 Tensor Core* | 989 TFLOPS | 835 TFLOPS |

| BFLOAT16 Tensor Core* | 1,979 TFLOPS | 1,671 TFLOPS |

| FP16 Tensor Core* | 1,979 TFLOPS | 1,671 TFLOPS |

| FP8 Tensor Core* | 3,958 TFLOPS | 3,341 TFLOPS |

| INT8 Tensor Core* | 3,958 TOPS | 3,341 TOPS |

| GPU Memory | 141GB | 141GB |

| GPU Memory Bandwidth | 4.8TB/s | 4.8TB/s |

| Decoders | 7 NVDEC, 7 JPEG | 7 NVDEC, 7 JPEG |

| Confidential Computing | Supported | Supported |

| Max Thermal Design Power (TDP) | Up to 700W (configurable) | Up to 600W (configurable) |

| Multi-Instance GPUs | Up to 7 MIGs @ 18GB each | Up to 7 MIGs @ 16.5GB each |

| Form Factor | SXM | PCIe |

| Interconnect | NVIDIA NVLink: 900GB/s, PCIe Gen5: 128GB/s | 2- or 4-way NVIDIA NVLink bridge: 900GB/s per GPU, PCIe Gen5: 128GB/s |

| Server Options | NVIDIA HGX™ H200 partner and NVIDIA-Certified Systems™ with 4 or 8 GPUs | NVIDIA MGX™ H200 NVL partner and NVIDIA-Certified Systems with up to 8 GPUs |

| NVIDIA AI Enterprise | Add-on | Included |

Note: The asterisk (*) indicates specifications with sparsity
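The starred rows quote peak throughput with structured sparsity enabled. As a rough sketch, assuming the with-sparsity figure is twice the dense figure (the same 2x ratio the A100 table in this article shows), the dense peaks for the H200 SXM column can be estimated by halving the values above. The helper name `dense_from_sparse` is illustrative and not part of any NVIDIA tool.

```python
# Peak throughput WITH sparsity, copied from the H200 SXM column above (TFLOPS).
H200_SXM_SPARSE_TFLOPS = {
    "TF32": 989,
    "BF16": 1979,
    "FP16": 1979,
    "FP8": 3958,
}

# Assumption: with-sparsity peak = 2 x dense peak.
SPARSITY_SPEEDUP = 2.0


def dense_from_sparse(sparse_tflops: float, speedup: float = SPARSITY_SPEEDUP) -> float:
    """Estimate dense peak throughput from a with-sparsity datasheet figure."""
    return sparse_tflops / speedup


if __name__ == "__main__":
    for precision, sparse in H200_SXM_SPARSE_TFLOPS.items():
        print(f"{precision}: ~{dense_from_sparse(sparse):.1f} TFLOPS dense")
```

Most models do not use structured sparsity out of the box, so the dense estimate is usually the more realistic ceiling when comparing cards.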

2. NVIDIA H100 GPU (Hopper Architecture)

The NVIDIA H100 GPU, based on the latest Hopper architecture, takes AI performance to new heights. With more Tensor Cores and cutting-edge memory technology, it’s designed for the most demanding AI tasks, including large-scale model training, AI research, and data analysis. The H100 is expected to push the limits of AI innovation in fields such as autonomous driving, medical research, and more.

Technical Specifications sourced from NVIDIA official site:

| Technical Specifications | H100 SXM | H100 NVL |

| FP64 | 34 teraFLOPS | 30 teraFLOPS |

| FP64 Tensor Core | 67 teraFLOPS | 60 teraFLOPS |

| FP32 | 67 teraFLOPS | 60 teraFLOPS |

| TF32 Tensor Core* | 989 teraFLOPS | 835 teraFLOPS |

| BFLOAT16 Tensor Core* | 1,979 teraFLOPS | 1,671 teraFLOPS |

| FP16 Tensor Core* | 1,979 teraFLOPS | 1,671 teraFLOPS |

| FP8 Tensor Core* | 3,958 teraFLOPS | 3,341 teraFLOPS |

| INT8 Tensor Core* | 3,958 TOPS | 3,341 TOPS |

| GPU Memory | 80GB | 94GB |

| GPU Memory Bandwidth | 3.35TB/s | 3.9TB/s |

| Decoders | 7 NVDEC, 7 JPEG | 7 NVDEC, 7 JPEG |

| Max Thermal Design Power (TDP) | Up to 700W (configurable) | 350-400W (configurable) |

| Multi-Instance GPUs | Up to 7 MIGs @ 10GB each | Up to 7 MIGs @ 12GB each |

| Form Factor | SXM | PCIe dual-slot air-cooled |

| Interconnect | NVIDIA NVLink: 900GB/s, PCIe Gen5: 128GB/s | NVIDIA NVLink: 600GB/s, PCIe Gen5: 128GB/s |

| Server Options | NVIDIA HGX H100 Partner and NVIDIA-Certified Systems™ with 4 or 8 GPUs | Partner and NVIDIA-Certified Systems with 1–8 GPUs |

| NVIDIA AI Enterprise | Add-on | Included |

Note: The asterisk (*) indicates specifications with sparsity

3. NVIDIA L4 Tensor Core GPU (Ada Lovelace Architecture)

The NVIDIA L4 Tensor Core GPU, built on the Ada Lovelace architecture, is optimized for AI inference and real-time workloads. With a significant number of Tensor Cores and high-speed memory, this GPU provides exceptional performance for tasks like AI model inference, autonomous vehicles, and AI-powered applications. The L4 GPU is perfect for businesses looking to leverage AI for rapid decision-making and deployment at scale.

Technical Specifications sourced from NVIDIA official site:

| Specifications | |

| FP32 | 30.3 teraFLOPS |

| TF32 Tensor Core | 120 teraFLOPS* |

| FP16 Tensor Core | 242 teraFLOPS* |

| BFLOAT16 Tensor Core | 242 teraFLOPS* |

| FP8 Tensor Core | 485 teraFLOPS* |

| INT8 Tensor Core | 485 TOPS* |

| GPU Memory | 24GB |

| GPU Memory Bandwidth | 300 GB/s |

| NVENC / NVDEC / JPEG Decoders | 2 / 4 / 4 |

| Max Thermal Design Power (TDP) | 72W |

| Form Factor | 1-slot low-profile, PCIe |

| Interconnect | PCIe Gen4 x16 64GB/s |

| Server Options | Partner and NVIDIA-Certified Systems with 1–8 GPUs |

Note: The asterisk (*) indicates specifications with sparsity

4. NVIDIA L40S GPU (Ada Lovelace Architecture)

The NVIDIA L40S GPU combines Ada Lovelace architecture with optimized memory to deliver outstanding performance for a wide range of AI, data analytics, and rendering applications. Whether for deep learning model training or inference tasks, the L40S excels with its substantial number of Tensor Cores, empowering industries to make significant advancements in AI-driven technologies.

Technical Specifications sourced from NVIDIA official site:

| Specification | |

| GPU Architecture | NVIDIA Ada Lovelace Architecture |

| GPU Memory | 48GB GDDR6 with ECC |

| Memory Bandwidth | 864GB/s |

| Interconnect Interface | PCIe Gen4 x16: 64GB/s bidirectional |

| CUDA Cores | 18176 |

| Third-Generation RT Cores | 142 |

| Fourth-Generation Tensor Cores | 568 |

| RT Core Performance (TFLOPS) | 209 |

| FP32 TFLOPS | 91.6 |

| TF32 Tensor Core TFLOPS | 183 / 366* |

| BFLOAT16 Tensor Core TFLOPS | 362.05 / 733* |

| FP16 Tensor Core TFLOPS | 362.05 / 733* |

| FP8 Tensor Core TFLOPS | 733 / 1,466* |

| Peak INT8 Tensor TOPS | 733 / 1,466* |

| Peak INT4 Tensor TOPS | 733 / 1,466* |

| Form Factor | 4.4″ (H) x 10.5″ (L), dual slot |

| Display Ports | 4x DisplayPort 1.4a |

| Max Power Consumption | 350W |

| Power Connector | 16-pin |

| Thermal | Passive |

| Virtual GPU (vGPU) Software Support | Yes |

| vGPU Profiles Supported | See the virtual GPU licensing guide |

| NVENC/NVDEC | 3x / 3x (includes AV1 encode and decode) |

| Secure Boot With Root of Trust | Yes |

| NEBS Ready | Level 3 |

| MIG Support | No |

| NVIDIA NVLink Support | No |

| Sparsity Support | Yes* |

Note: The asterisk (*) indicates specifications with sparsity

5. NVIDIA L40 GPU (Ada Lovelace Architecture)

The NVIDIA L40 GPU is designed for intensive AI workloads and delivers high performance for both training and inference. Its Tensor Cores and high memory bandwidth make it well suited to applications like computer vision, machine learning, and complex simulations, and a good fit for companies looking to push the boundaries of AI and automation.

Technical Specifications sourced from NVIDIA official site:

| Technical Specifications | NVIDIA L40* |

| GPU Architecture | NVIDIA Ada Lovelace architecture |

| GPU Memory | 48GB GDDR6 with ECC |

| Memory Bandwidth | 864GB/s |

| Interconnect Interface | PCIe Gen4x16: 64GB/s bi-directional |

| CUDA Cores | 18,176 |

| RT Cores | 142 |

| Tensor Cores | 568 |

| RT Core performance TFLOPS | 209 |

| FP32 TFLOPS | 90.5 |

| TF32 Tensor Core TFLOPS | 90.5 |

| BFLOAT16 Tensor Core TFLOPS | 181.05 |

| FP16 Tensor Core | 181.05 |

| FP8 Tensor Core | 362 |

| Peak INT8 Tensor TOPS | 362 |

| Peak INT4 Tensor TOPS | 724 |

| Form Factor | 4.4″ (H) x 10.5″ (L) – dual slot |

| Display Ports | 4 x DisplayPort 1.4a |

| Max Power Consumption | 300W |

| Power Connector | 16-pin |

| Thermal | Passive |

| Virtual GPU (vGPU) software support | Yes |

| vGPU Profiles Supported | See Virtual GPU Licensing Guide† |

| NVENC / NVDEC | 3x / 3x (includes AV1 encode and decode) |

| Secure Boot with Root of Trust | Yes |

| NEBS Ready | Level 3 |

| MIG Support | No |

| NVLink Support | No |

* Preliminary specifications, subject to change

** With Sparsity

† Coming in a future release of NVIDIA vGPU software.

6. NVIDIA A100 GPU (Ampere Architecture)

The NVIDIA A100 GPU is built for high-performance AI workloads, including deep learning training and inference. Thanks to its third-generation Tensor Cores, it offers significantly higher AI and machine learning performance than the V100. It’s widely used in AI data centers, research labs, and by enterprises looking to scale their AI infrastructure.

Technical Specifications sourced from NVIDIA official site:

| NVIDIA A100 Tensor Core GPU Specifications (SXM4 and PCIe Form Factors) | A100 80GB PCIe | A100 80GB SXM |

| FP64 | 9.7 TFLOPS | 9.7 TFLOPS |

| FP64 Tensor Core | 19.5 TFLOPS | 19.5 TFLOPS |

| FP32 | 19.5 TFLOPS | 19.5 TFLOPS |

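| Tensor Float 32 (TF32) | 156 TFLOPS / 312 TFLOPS* | 156 TFLOPS / 312 TFLOPS* |

| BFLOAT16 Tensor Core | 312 TFLOPS / 624 TFLOPS* | 312 TFLOPS / 624 TFLOPS* |

| FP16 Tensor Core | 312 TFLOPS / 624 TFLOPS* | 312 TFLOPS / 624 TFLOPS* |

| INT8 Tensor Core | 624 TOPS / 1,248 TOPS* | 624 TOPS / 1,248 TOPS* |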

| GPU Memory | 80GB HBM2e | 80GB HBM2e |

| GPU Memory Bandwidth | 1,935GB/s | 2,039GB/s |

| Max Thermal Design Power (TDP) | 300W | 400W*** |

| Multi-Instance GPU | Up to 7 MIGs @ 10GB | Up to 7 MIGs @ 10GB |

| Form Factor | PCIe dual-slot air cooled or single-slot liquid cooled | SXM |

| Interconnect | NVIDIA® NVLink® Bridge for 2 GPUs: 600GB/s**; PCIe Gen4: 64GB/s | NVLink: 600GB/s; PCIe Gen4: 64GB/s |

| Server Options | Partner and NVIDIA-Certified Systems™ with 1-8 GPUs | NVIDIA HGX™ A100 partner and NVIDIA-Certified Systems with 4, 8, or 16 GPUs; NVIDIA DGX™ A100 with 8 GPUs |

*With sparsity

** SXM4 GPUs via HGX A100 server boards; PCIe GPUs via NVLink Bridge for up to two GPUs

*** 400W TDP for standard configuration. HGX A100-80GB CTS (Custom Thermal Solution) SKU can support TDPs up to 500W

7. NVIDIA A2 Tensor Core GPU (Ampere Architecture)

The NVIDIA A2 Tensor Core GPU, based on the Ampere architecture, provides an entry-level yet powerful option for AI and machine learning workloads. Its efficiency and compact form factor make it a great choice for scalable, cost-effective AI deployments, including edge computing, AI inference, and data processing. The A2 is perfect for organizations looking to integrate AI without sacrificing performance.

Technical Specifications sourced from NVIDIA official site:

| Peak FP32 | 4.5 TF |

| TF32 Tensor Core | 9 TF / 18 TF* |

| BFLOAT16 Tensor Core | 18 TF / 36 TF* |

| Peak FP16 Tensor Core | 18 TF / 36 TF* |

| Peak INT8 Tensor Core | 36 TOPS / 72 TOPS* |

| Peak INT4 Tensor Core | 72 TOPS / 144 TOPS* |

| RT Cores | 10 |

| Media engines | 1 video encoder, 2 video decoders (includes AV1 decode) |

| GPU memory | 16GB GDDR6 |

| GPU memory bandwidth | 200GB/s |

| Interconnect | PCIe Gen4 x8 |

| Form factor | 1-slot, Low-Profile PCIe |

| Max thermal design power (TDP) | 40-60W (Configurable) |

| vGPU software support | NVIDIA Virtual PC (vPC), NVIDIA Virtual Applications (vApps), NVIDIA RTX Virtual Workstation (vWS), NVIDIA AI Enterprise, NVIDIA Virtual Compute Server (vCS) |

*With sparsity

8. NVIDIA A30 Tensor Core GPU (Ampere Architecture)

The NVIDIA A30 GPU, utilizing the Ampere architecture, is built to handle complex AI workloads with ease. Whether for training deep learning models or running large-scale inference tasks, the A30 offers unmatched performance with its large number of CUDA cores and Tensor Cores. It’s designed to meet the needs of businesses looking to accelerate AI-driven innovation in fields such as healthcare, finance, and autonomous systems.

Technical Specifications sourced from NVIDIA official site:

| Peak FP64 | 5.2 TF |

| Peak FP64 Tensor Core | 10.3 TF |

| Peak FP32 | 10.3 TF |

| TF32 Tensor Core | 82 TF / 165 TF* |

| BFLOAT16 Tensor Core | 165 TF / 330 TF* |

| Peak FP16 Tensor Core | 165 TF / 330 TF* |

| Peak INT8 Tensor Core | 330 TOPS / 661 TOPS* |

| Peak INT4 Tensor Core | 661 TOPS / 1,321 TOPS* |

| Media engines | 1 optical flow accelerator (OFA), 1 JPEG decoder (NVJPEG), 4 video decoders (NVDEC) |

| GPU Memory | 24GB HBM2 |

| GPU Memory Bandwidth | 933GB/s |

| Interconnect | PCIe Gen4: 64GB/s; Third-gen NVIDIA® NVLINK® 200GB/s** |

| Form Factor | 2-slot, full height, full length (FHFL) |

| Max thermal design power (TDP) | 165W |

| Multi-Instance GPU (MIG) | 4 MIGs @ 6GB each, 2 MIGs @ 12GB each, or 1 MIG @ 24GB |

| Virtual GPU (vGPU) software support | NVIDIA AI Enterprise, NVIDIA Virtual Compute Server |

*With sparsity

** NVLink Bridge for up to two GPUs.

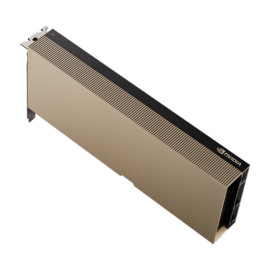

9. NVIDIA A40 GPU (Ampere Architecture)

The NVIDIA A40 GPU, built on the Ampere architecture, delivers powerful performance for high-performance computing and AI-driven applications. With its abundant CUDA cores and ample memory, the A40 excels at training deep learning models, data analytics, and AI inference workloads. Designed for data centers, the A40 offers an ideal balance of performance and power efficiency, making it a solid choice for enterprises looking to scale their AI operations. The A40 is perfect for industries like healthcare, automotive, and scientific research, where robust AI solutions are critical for innovation.

Technical Specifications sourced from NVIDIA official site:

| SPECIFICATIONS | Details |

| GPU architecture | NVIDIA Ampere architecture |

| GPU memory | 48 GB GDDR6 with ECC |

| Memory bandwidth | 696 GB/s |

| Interconnect interface | NVIDIA® NVLink® 112.5 GB/s (bidirectional); PCIe Gen4: 64GB/s |

| CUDA Cores | 10,752 |

| RT Cores | 84 |

| Tensor Cores | 336 |

| Peak FP32 TFLOPS (non-Tensor) | 37.4 |

| Peak FP16 Tensor TFLOPS with FP16 Accumulate | 149.7 / 299.4* |

| Peak TF32 Tensor TFLOPS | 74.8 / 149.6* |

| RT Core performance TFLOPS | 73.1 |

| Peak BF16 Tensor TFLOPS with FP32 Accumulate | 149.7 / 299.4* |

| Peak INT8 Tensor TOPS | 299.3 / 598.6* |

| Peak INT4 Tensor TOPS | 598.7 / 1,197.4* |

| Form factor | 4.4″ [H] x 10.5″ [L] dual slot |

| Display ports | 3x DisplayPort 1.4**; supports NVIDIA Mosaic and Quadro® Sync |

| Max power consumption | 300 W |

| Power connector | 8-pin CPU |

| Thermal solution | Passive |

| Virtual GPU (vGPU) software support | NVIDIA vPC/vApps, NVIDIA RTX Virtual Workstation, NVIDIA Virtual Compute Server |

| vGPU profiles supported | See the Virtual GPU Licensing Guide |

| NVENC / NVDEC | 1x / 2x (includes AV1 decode) |

| Secure and measured boot with hardware root of trust | Yes (optional) |

| NEBS ready | Level 3 |

| Compute APIs | CUDA, DirectCompute, OpenCL™, OpenACC® |

| Graphics APIs | DirectX 12.0†, Shader Model 5.1†, OpenGL 4.6†, Vulkan 1.1† |

| MIG support | No |

*Structural sparsity enabled.

** A40 is configured for virtualization by default with physical display connectors disabled. The display outputs can be enabled via management software tools.

10. NVIDIA A16 Tensor Core GPU (Ampere Architecture)

The NVIDIA A16 GPU, based on the Ampere architecture, strikes the perfect balance between performance and cost-efficiency. It’s designed to accelerate AI workloads such as deep learning model inference and real-time data analytics. With its substantial memory and Tensor Cores, the A16 is an ideal choice for companies aiming to deploy AI at scale in a data center environment.

Technical Specifications sourced from NVIDIA official site:

| SPECIFICATIONS | Details |

| GPU Architecture | NVIDIA Ampere architecture |

| GPU memory | 4x 16 GB GDDR6 |

| Memory bandwidth | 4x 200 GB/s |

| Error-correcting code (ECC) | Yes |

| CUDA Cores | 4x 1280 |

| Tensor Cores | 4x 40 |

| RT Cores | 4x 10 |

| FP32 / TF32 (TFLOPS) | 4x 4.5 (FP32) / 4x 9 (TF32) / 4x 18 (TF32 with sparsity) |

| FP16 (TFLOPS) | 4x 17.9 / 4x 35.9 (with sparsity) |

| INT8 (TOPS) | 4x 35.9 / 4x 71.8 (with sparsity) |

| System interface | PCIe Gen4 (x16) |

| Max power consumption | 250W |

| Thermal solution | Passive |

| Form factor | Full height, full length (FHFL) Dual Slot |

| Power connector | 8-pin CPU |

| Encode/decode engines | 4 NVENC/8 NVDEC (includes AV1 decode) |

| Secure and measured boot with hardware root of trust for GPU | Yes (optional) |

| vGPU software support | NVIDIA Virtual PC (vPC), NVIDIA Virtual Applications (vApps), NVIDIA RTX Virtual Workstation (vWS), NVIDIA AI Enterprise, NVIDIA Virtual Compute Server (vCS) |

| Graphics APIs | DirectX 12.0, Shader Model 5.1, OpenGL 4.6, Vulkan 1.1 |

| Compute APIs | CUDA, DirectCompute, OpenCL™, OpenACC® |

| MIG support | No |

11. NVIDIA A10 Tensor Core GPU (Ampere Architecture)

The NVIDIA A10 Tensor Core GPU, designed with the Ampere architecture, is optimized for a variety of AI and data center workloads. With substantial memory and Tensor Cores, it is ideal for both training and inference tasks. It’s well-suited for industries like healthcare, finance, and retail, where AI-powered insights and real-time data processing are crucial.

Technical Specifications sourced from NVIDIA official site:

| SPECIFICATIONS | Details |

| FP32 | 31.2 TF |

| TF32 Tensor Core | 62.5 TF / 125 TF* |

| BFLOAT16 Tensor Core | 125 TF / 250 TF* |

| FP16 Tensor Core | 125 TF / 250 TF* |

| INT8 Tensor Core | 250 TOPS / 500 TOPS* |

| INT4 Tensor Core | 500 TOPS / 1,000 TOPS* |

| RT Cores | 72 |

| Encode / Decode | 1 encoder 1 decoder (+AV1 decode) |

| GPU Memory | 24 GB GDDR6 |

| GPU Memory Bandwidth | 600 GB/s |

| Interconnect | PCIe Gen4: 64 GB/s |

| Form Factor | 1-slot FHFL |

| Max TDP Power | 150W |

| vGPU Software Support | NVIDIA vPC/vApps, NVIDIA RTX™ vWS, NVIDIA Virtual Compute Server (vCS) |

| Secure and Measured Boot with Hardware Root of Trust | Yes |

| NEBS Ready | Level 3 |

| Power Connector | PEX 8-pin |

*with sparsity

12. NVIDIA T4 GPU (Turing Architecture)

The NVIDIA T4 GPU is a more budget-friendly option for AI tasks like inference, especially when deployed at scale. It’s commonly used in cloud services and data centers to provide real-time inference capabilities for AI applications. While it doesn’t have the same performance as the A100 or V100, the T4 is an excellent choice for companies that need to deploy AI models efficiently and cost-effectively.

Technical Specifications sourced from NVIDIA official site:

| GPU Architecture | NVIDIA Turing |

| NVIDIA Turing Tensor Cores | 320 |

| NVIDIA CUDA® Cores | 2,560 |

| Single-Precision | 8.1 TFLOPS |

| Mixed-Precision (FP16/FP32) | 65 TFLOPS |

| INT8 | 130 TOPS |

| INT4 | 260 TOPS |

| GPU Memory | 16 GB GDDR6 |

| GPU Memory Bandwidth | 300 GB/sec |

| ECC | Yes |

| Interconnect Bandwidth | 32 GB/sec |

| System Interface | x16 PCIe Gen3 |

| Form Factor | Low-Profile PCIe |

| Thermal Solution | Passive |

| Compute APIs | CUDA, NVIDIA TensorRT™, ONNX |

13. NVIDIA V100 GPU (Volta Architecture)

The NVIDIA V100 GPU, powered by the Volta architecture, is engineered for high-performance computing, deep learning, and AI workloads. Featuring NVIDIA’s revolutionary Tensor Core technology, the V100 delivers massive acceleration for training and inference of deep learning models, dramatically improving performance on large datasets.

Technical Specifications sourced from NVIDIA official site:

| Specifications | V100 PCIe | V100 SXM2 | V100S PCIe |

| GPU Architecture | NVIDIA Volta | NVIDIA Volta | NVIDIA Volta |

| NVIDIA Tensor Cores | 640 | 640 | 640 |

| NVIDIA CUDA® Cores | 5120 | 5120 | 5120 |

| Double-Precision Performance | 7 TFLOPS | 7.8 TFLOPS | 8.2 TFLOPS |

| Single-Precision Performance | 14 TFLOPS | 15.7 TFLOPS | 16.4 TFLOPS |

| Tensor Performance | 112 TFLOPS | 125 TFLOPS | 130 TFLOPS |

| GPU Memory | 32 GB / 16 GB HBM2 | 32 GB / 16 GB HBM2 | 32 GB HBM2 |

| Memory Bandwidth | 900 GB/sec | 900 GB/sec | 1134 GB/sec |

| ECC | Yes | Yes | Yes |

| Interconnect Bandwidth | 32 GB/sec | 300 GB/sec | 32 GB/sec |

| System Interface | PCIe Gen3 | NVIDIA NVLink™ | PCIe Gen3 |

| Form Factor | PCIe Full Height/Length | SXM2 | PCIe Full Height/Length |

| Max Power Consumption | 250 W | 300 W | 250 W |

| Thermal Solution | Passive | Passive | Passive |

| Compute APIs | CUDA, DirectCompute, OpenCL™, OpenACC® | CUDA, DirectCompute, OpenCL™, OpenACC® | CUDA, DirectCompute, OpenCL™, OpenACC® |

14. GeForce RTX high-performance series GPUs

The GeForce RTX high-performance series GPUs (such as the RTX 3090, RTX 4080, RTX 4090, and RTX 5090) are aimed primarily at the gaming market, but they offer enough CUDA cores and memory bandwidth for entry-level or mid-range AI model training. They also support ray tracing, so one card can serve both graphics rendering and AI training. You can find the technical specifications for these GPUs at https://www.techpowerup.com/gpu-specs/.

NVIDIA AI Chip Price

The price of NVIDIA AI chips can vary widely depending on the model, memory configuration, and the retailer. Below is an approximate price range for some of the most popular AI GPUs:

- NVIDIA A100 GPU: The A100 is a high-end GPU and can range from $11,000 to $15,000, depending on memory configuration (40GB or 80GB).

- NVIDIA H100 GPU: As one of the newest and most powerful AI GPUs, the H100 can cost $20,000 or more, with pricing fluctuating based on availability and market demand.

- NVIDIA T4 GPU: The T4 is one of the more affordable AI GPUs, typically priced around $2,500 to $4,000, depending on the retailer.

- NVIDIA H200 GPU: The H200 is an advanced AI and machine learning GPU, offering top-tier performance. Pricing for the H200 starts at around $22,000, with variations depending on the specific memory configuration and market conditions.

- NVIDIA L4 GPU: The L4 is designed for data centers, offering a balance of performance and affordability. Typically, the L4 is priced between $7,000 and $9,000, with the cost influenced by memory specifications and demand.

- NVIDIA L40S GPU: The L40S is a high-performance GPU tailored for workloads like deep learning and data analytics. Its pricing generally falls between $8,000 and $12,000, with the final price varying based on the memory size and other features.

- NVIDIA L40 GPU: The L40 provides exceptional AI capabilities for enterprise and cloud-based solutions. The cost of the L40 is usually around $12,000 to $16,000, depending on the configuration and current market prices.

- NVIDIA A2 GPU: The A2 is a more budget-friendly option for AI acceleration, designed for edge AI and machine learning applications. Prices typically range from $2,000 to $3,000, with minor fluctuations depending on retailer and stock availability.

- NVIDIA A30 GPU: The A30 offers solid performance for large-scale AI deployments and high-performance computing. It’s priced around $6,000 to $8,000, with price variations based on specific memory configurations and the broader market trend.

- NVIDIA A40 GPU: The A40 is a professional-grade GPU designed for AI workloads, data centers, and virtual workstations. Its price typically ranges from $5,000 to $7,500, depending on the memory configuration and market conditions.

- NVIDIA A10 GPU: The A10 offers excellent performance for mid-range AI applications and graphics workloads. It generally costs between $3,500 and $5,000, with variations depending on memory capacity and availability.

- NVIDIA A16 GPU: The A16 is optimized for multi-user virtual desktops and cloud-based workloads. This GPU is more budget-friendly, priced around $2,000 to $3,000, depending on the retailer and specific configuration options.

These prices reflect the high-performance nature of NVIDIA AI chips, but it’s important to remember that they can fluctuate based on the retail market, especially in the face of high demand for AI hardware.
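One way to compare these price ranges is cost per unit of throughput. The sketch below is a rough illustration, not a buying recommendation: it takes the midpoint of each price range listed above and divides it by the dense FP16 Tensor Core figure from the specification tables earlier in this article. The function names and the choice of the midpoint are assumptions made for the example.

```python
# (low_price_usd, high_price_usd, dense_fp16_tensor_tflops), all taken from this article.
GPUS = {
    "A100": (11_000, 15_000, 312),
    "A30": (6_000, 8_000, 165),
    "A10": (3_500, 5_000, 125),
    "T4": (2_500, 4_000, 65),
}


def usd_per_tflops(low_usd: float, high_usd: float, tflops: float) -> float:
    """Midpoint price divided by peak dense FP16 throughput."""
    midpoint = (low_usd + high_usd) / 2  # assumption: midpoint represents the range
    return midpoint / tflops


def rank_by_value(gpus: dict) -> list:
    """Return (name, usd_per_tflops) pairs, cheapest per TFLOPS first."""
    scored = {name: usd_per_tflops(*spec) for name, spec in gpus.items()}
    return sorted(scored.items(), key=lambda item: item[1])


if __name__ == "__main__":
    for name, cost in rank_by_value(GPUS):
        print(f"{name}: ${cost:.2f} per dense FP16 TFLOPS")
```

On these inputs the A10 comes out at $34 per TFLOPS and the T4 at $50. Peak TFLOPS ignores memory capacity and bandwidth, so treat the ranking as one input among several.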

Where to Buy NVIDIA AI Chips

If you’re interested in purchasing NVIDIA AI chips, you can purchase them from us or find them through a variety of other online retailers and hardware distributors. NVIDIA’s official website, Amazon, Newegg, and enterprise hardware suppliers like Dell, HP, and Supermicro often have NVIDIA AI chips for sale. Prices may vary based on location, availability, and whether you’re buying new or refurbished units.

How to Choose the Right GPU for AI Training

When it comes to AI training, the amount of VRAM determines whether the training can be done, while the performance level dictates how fast it will run. It’s important to note that GPUs from different generations cannot be directly compared in terms of performance.
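To make the VRAM point concrete, here is a minimal sketch of a back-of-the-envelope estimate. It assumes mixed-precision training with the Adam optimizer at roughly 16 bytes per parameter (FP16 weights and gradients plus FP32 master weights and two optimizer states), and a placeholder 1.2x factor for activations and framework overhead. Both numbers are assumptions for illustration; real usage depends heavily on batch size, sequence length, and the framework.

```python
# Assumption: ~16 bytes per parameter for mixed-precision Adam training
# (2 B FP16 weights + 2 B FP16 grads + 4 B FP32 weights + 8 B Adam states).
BYTES_PER_PARAM = 16

# Assumption: placeholder multiplier for activations and framework overhead.
OVERHEAD_FACTOR = 1.2


def training_vram_gb(num_params: int,
                     bytes_per_param: int = BYTES_PER_PARAM,
                     overhead_factor: float = OVERHEAD_FACTOR) -> float:
    """Rough VRAM estimate in GB (1 GB = 1e9 bytes) for training a model."""
    return num_params * bytes_per_param * overhead_factor / 1e9


def fits(num_params: int, vram_gb: float) -> bool:
    """True if the rough estimate fits in the given VRAM."""
    return training_vram_gb(num_params) <= vram_gb


if __name__ == "__main__":
    one_billion = 1_000_000_000
    print(f"1B params: ~{training_vram_gb(one_billion):.1f} GB")
    for name, vram in [("T4", 16), ("A10", 24), ("A100 80GB", 80)]:
        print(f"  fits on {name} ({vram} GB): {fits(one_billion, vram)}")
```

Under these assumptions a 1-billion-parameter model needs roughly 19 GB, which rules out a 16GB card regardless of how fast it is.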

Key parameters to consider when selecting a GPU

VRAM Size: Prioritize GPUs with 8GB or more of VRAM. This directly affects the size of the models and batch sizes that can be trained.

Performance Level: Choose based on your needs; the higher the performance, the better, but always consider cost-effectiveness.

Brand and After-Sales Service: Prefer well-known brands like ASUS, MSI, or Gigabyte. Alternatively, brands like Maxsun, Colorful, and Galaxy can also be considered. Be cautious when buying GPUs from other brands (brand suggestions are for reference, and users can select based on their actual needs).

Key Parameters to Check: GPU information can be viewed using tools like GPU-Z. Pay special attention to two parameters: Shaders and Memory Size. Shaders corresponds to performance (it is the number of CUDA cores), while Memory Size is the amount of VRAM.

Bus Width: Be aware that a bus width under 192 bits may cause transmission bottlenecks.
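The bus width matters because peak memory bandwidth is the bus width multiplied by the per-pin data rate. The sketch below applies that formula. The 384-bit bus width and the data rates are assumed values chosen because they reproduce the 864GB/s and 696GB/s figures in the L40 and A40 tables above; they are not quoted in this article, so check them against the vendor specification before relying on them.

```python
def memory_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s.

    bus_width_bits: width of the memory interface in bits.
    data_rate_gbps: effective data rate per pin in Gbit/s.
    """
    return bus_width_bits / 8 * data_rate_gbps


if __name__ == "__main__":
    # Assumed inputs (not from this article): 384-bit bus, 18 and 14.5 Gbit/s per pin.
    print("384-bit @ 18.0 Gbps:", memory_bandwidth_gbs(384, 18.0), "GB/s")
    print("384-bit @ 14.5 Gbps:", memory_bandwidth_gbs(384, 14.5), "GB/s")
    # The same memory on a narrow 128-bit bus delivers a third of the bandwidth.
    print("128-bit @ 18.0 Gbps:", memory_bandwidth_gbs(128, 18.0), "GB/s")
```

The last line shows the bottleneck the guideline warns about: with identical memory chips, a 128-bit bus moves one third of the data per second that a 384-bit bus does.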

In addition to the above, the following aspects are also worth considering:

Cooling Performance: A good cooling system ensures long-term stability and performance.

Power Consumption and Power Supply Requirements: Make sure the system power supply can provide sufficient wattage and has the appropriate connectors.

Interface Compatibility: Check the compatibility of the GPU with the motherboard’s PCIe version and slot size.

Size and Space: Ensure the case has enough room for the GPU.

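Multi-GPU Setup: Consider whether you need more than one GPU and whether the cards and motherboard support it. For AI training, the relevant NVIDIA interconnect is NVLink; SLI and CrossFire target gaming, and CrossFire is an AMD technology.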

Budget and Cost-Effectiveness: Compare performance, price, and long-term usage costs.

Future Compatibility and Upgradability: Choose GPUs from manufacturers that provide strong support and regular driver updates.
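The power supply check above can be sketched as simple arithmetic. This is a minimal example, assuming 300 W for the rest of the system (CPU, drives, fans) and a 25% headroom margin. Both values are placeholders rather than figures from this article, while the GPU wattages come from the specification tables earlier on.

```python
def required_psu_watts(gpu_tdp_watts: float,
                       gpu_count: int,
                       other_components_watts: float = 300.0,  # assumption
                       headroom: float = 1.25) -> float:       # assumption
    """Suggested PSU rating: total estimated load times a safety margin."""
    load = gpu_tdp_watts * gpu_count + other_components_watts
    return load * headroom


if __name__ == "__main__":
    # 300 W per A40 and 72 W per L4, as listed in the tables above.
    print("2x A40:", required_psu_watts(300, 2), "W")
    print("1x L4: ", required_psu_watts(72, 1), "W")
```

Besides total wattage, confirm that the supply has the connectors each card expects, such as the 16-pin or 8-pin CPU connectors listed in the tables.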

Choose a suitable GPU based on your specific application and budget

Entry-Level

For beginners or small-scale projects, budget-friendly consumer-grade GPUs such as NVIDIA’s GeForce RTX 3060 or 3070 series are a good choice. Although primarily targeted at gamers, these GPUs still offer enough CUDA cores and good memory bandwidth for entry-level AI model training.

Mid-Level

For medium-sized projects that require higher computational power, GPUs like the NVIDIA RTX 3080, RTX 3090, or RTX 4090 are better choices. They offer more CUDA cores and larger memory capacities, which are necessary for training more complex models.

High-End and Professional-Level

For large-scale AI training tasks and enterprise-level applications, it is recommended to opt for professional GPUs like NVIDIA’s A100 or V100. These GPUs are designed specifically for AI training and high-performance computing (HPC), offering immense computational power, extremely high memory bandwidth and capacity, and support for mixed-precision training. While expensive, they provide unparalleled training efficiency and speed.
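The tiers above boil down to filtering by memory first and budget second. As an illustrative sketch, the snippet below does that over a handful of data center GPUs from this article, using the memory sizes from the specification tables and the upper end of each listed price range (for the H200, the quoted starting price). The `GpuOption` type and `candidates` function are hypothetical names for this example.

```python
from typing import List, NamedTuple


class GpuOption(NamedTuple):
    name: str
    vram_gb: int
    price_usd: int  # upper end of the price range quoted in this article


CATALOG = [
    GpuOption("T4", 16, 4_000),
    GpuOption("A10", 24, 5_000),
    GpuOption("A30", 24, 8_000),
    GpuOption("A40", 48, 7_500),
    GpuOption("L40S", 48, 12_000),
    GpuOption("A100 80GB", 80, 15_000),
    GpuOption("H200", 141, 22_000),
]


def candidates(min_vram_gb: int, max_budget_usd: int) -> List[str]:
    """Names of GPUs with enough VRAM that fit the budget, cheapest first."""
    matches = [g for g in CATALOG
               if g.vram_gb >= min_vram_gb and g.price_usd <= max_budget_usd]
    return [g.name for g in sorted(matches, key=lambda g: g.price_usd)]


if __name__ == "__main__":
    print(candidates(min_vram_gb=48, max_budget_usd=13_000))
    print(candidates(min_vram_gb=80, max_budget_usd=10_000))
```

An empty result, as in the second call, means the memory requirement and the budget are incompatible, so one of them has to give.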

Conclusion

NVIDIA’s AI chips have become the standard for powering AI, deep learning, and high-performance computing workloads. From the NVIDIA T4 to the latest H200 based on the Hopper architecture, these GPUs are designed to deliver unmatched performance for AI researchers, enterprises, and cloud services.

When selecting the right GPU for your AI applications, it’s important to consider both the performance needs and price of the chip. With a variety of options ranging from the budget-friendly T4 to the powerful H200 and H100, NVIDIA offers AI chips for every budget and performance requirement. If you’re looking to take your AI projects to the next level, investing in an NVIDIA AI GPU will provide the computational power you need to tackle even the most complex AI and machine learning challenges.