The NVIDIA A40 GPU is part of NVIDIA’s Ampere-based lineup, designed for data centers, AI workloads, and high-performance computing (HPC) applications. It is a passively cooled data center card aimed at professional users who need high computational performance and a large memory pool. This article covers the A40’s specs, price, and performance benchmarks, compares it with other high-end NVIDIA GPUs such as the RTX 4090, A100, and A6000, and looks at how it relates to the L40 and successor models.


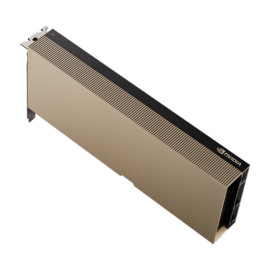

What is the NVIDIA A40 GPU?

The NVIDIA A40 GPU is built on the NVIDIA Ampere architecture, offering substantial improvements in performance over previous generations. Targeted towards the enterprise market, it excels in AI, machine learning, deep learning, data analytics, and scientific computing applications. The A40 GPU is optimized for demanding tasks such as training and inference for AI models, real-time graphics rendering, and simulation workloads.

The A40 supports technologies like NVIDIA RTX, CUDA, and NVLink, enabling users to harness the power of GPU-accelerated computing.

NVIDIA A40 Price

The NVIDIA A40 typically sells for around $5,000 to $6,000, with some listings closer to $4,000, depending on the retailer, availability, and market conditions. That is expensive in absolute terms, but it buys 48GB of memory and enterprise features that matter for AI and HPC workloads. Among enterprise-grade GPUs, the A40 is positioned as a relatively affordable option next to the A100, while its pricing relative to the A6000 varies by vendor.

A40 GPU Memory

The NVIDIA A40 is equipped with 48GB of GDDR6 memory with ECC, which gives it enough capacity for large AI models, simulations, and rendering tasks. Workloads that would have to be split across several smaller cards can often stay on a single A40, which avoids out-of-memory failures and the overhead of moving data between GPUs. Its memory bandwidth of roughly 696 GB/s keeps the compute units supplied with data in AI and deep learning applications, although this is GDDR6 rather than the HBM2 stacked memory used on the A100.
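As a rough illustration of what 48GB means in practice, the sketch below estimates how much memory a model’s weights occupy at different precisions. The function names, the 80% headroom factor, and the example model sizes are assumptions made for illustration; activations, optimizer state, and framework overhead are ignored, so real requirements will be higher.

```python
# Rough estimate of the GPU memory needed just to hold model weights.
# Activations, optimizer state, and framework overhead are NOT included.

A40_MEMORY_GB = 48  # capacity quoted in this article

# Bytes used to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weights_gb(num_params: float, dtype: str = "fp16") -> float:
    """Return the size of the weights in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def fits_on_a40(num_params: float, dtype: str = "fp16", headroom: float = 0.8) -> bool:
    """True if the weights fit within a fraction `headroom` of 48 GB.

    The 0.8 default is an arbitrary safety margin, not an NVIDIA figure.
    """
    return weights_gb(num_params, dtype) <= A40_MEMORY_GB * headroom


if __name__ == "__main__":
    # Hypothetical model sizes, chosen only to show the arithmetic.
    for params in (7e9, 13e9, 30e9):
        for dtype in ("fp32", "fp16"):
            size = weights_gb(params, dtype)
            print(f"{params / 1e9:.0f}B params, {dtype}: "
                  f"{size:.0f} GB, fits={fits_on_a40(params, dtype)}")
```

Under these assumptions, a 13-billion-parameter model fits in half precision but not in full precision, which is the kind of boundary the A40’s memory capacity is meant to push back.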

A40 GPU Benchmark

Benchmark results generally show the A40 performing well in both AI and HPC workloads, with a clear step up from the previous generation in AI model training. The figures quoted for it are up to 20% higher throughput than the A10 and up to 40% faster performance than the T4, although actual gains depend heavily on the workload, numeric precision, and batch size. That makes it one of the stronger GPUs in its price bracket.

In computational benchmarks, the A40 performs well in matrix multiplication, deep learning training, and inference. Its 48GB of memory lets it keep large datasets and models on the card, which suits it to high-performance computing (HPC) applications.

NVIDIA A40 GPU Specs

- Architecture: NVIDIA Ampere

- CUDA Cores: 10,752

- Memory: 48GB GDDR6

- Memory Bandwidth: 696 GB/s

- Tensor Cores: 336

- NVLink Support: Yes

- CUDA Compute Capability: 8.6 (requires CUDA 11.1 or later)

- Interface and Form Factor: PCIe 4.0 x16, dual-slot card

- TDP: 300W

These specifications place the A40 firmly in the high-performance category, offering the memory capacity and computational cores necessary for intensive tasks like deep learning, scientific simulations, and rendering.
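Two of the headline numbers can be derived from lower-level parameters, which is a useful sanity check when reading spec sheets. The sketch below assumes a 384-bit memory bus, a 14.5 Gbps effective GDDR6 data rate, and a 1.74 GHz boost clock; none of these values appear in this article, so treat them as assumptions to verify against the official datasheet.

```python
# Derive headline figures from lower-level parameters.
# ASSUMPTIONS (not stated in this article): 384-bit bus, 14.5 Gbps per pin,
# 1.74 GHz boost clock. Check them against the official datasheet.

CUDA_CORES = 10_752  # from the spec list above


def memory_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: bus width (bits) x per-pin rate / 8."""
    return bus_width_bits * data_rate_gbps / 8


def peak_fp32_tflops(cuda_cores: int, boost_clock_ghz: float) -> float:
    """Peak FP32 throughput in TFLOPS.

    Each CUDA core can issue one fused multiply-add per clock,
    which counts as 2 floating-point operations.
    """
    return cuda_cores * 2 * boost_clock_ghz / 1000


if __name__ == "__main__":
    print(f"Memory bandwidth: {memory_bandwidth_gbs(384, 14.5):.0f} GB/s")
    print(f"Peak FP32: {peak_fp32_tflops(CUDA_CORES, 1.74):.1f} TFLOPS")
```

With these inputs the bandwidth comes out very close to the figure listed above. Both results are theoretical peaks, and sustained throughput in real workloads is lower.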

NVIDIA A40 vs. RTX 4090: Key Differences

When comparing the NVIDIA A40 and the RTX 4090, it’s important to consider their target use cases, performance capabilities, and architectural differences. Both GPUs are designed for high performance, but they cater to distinct user needs: the A40 is aimed at enterprise and professional workloads, while the RTX 4090 is optimized for gaming and creative tasks.

1. Purpose and Target Audience

- NVIDIA A40: Designed for data centers, AI training, virtual desktops, and professional visualization. It excels in rendering, 3D design, and large-scale computations.

- RTX 4090: Primarily built for gaming enthusiasts and content creators. It offers exceptional performance in 4K gaming and creative applications such as video editing and 3D modeling.

2. Performance and Specifications

- NVIDIA A40:

  - Architecture: Ampere

  - CUDA Cores: 10,752

  - Memory: 48GB GDDR6 ECC

  - TDP: 300W

  - Key Features: Supports NVIDIA vGPU technology and ECC memory for enhanced reliability in mission-critical environments. Unlike the A100, the A40 does not support Multi-Instance GPU (MIG).

- RTX 4090:

  - Architecture: Ada Lovelace

  - CUDA Cores: 16,384

  - Memory: 24GB GDDR6X

  - TDP: 450W

  - Key Features: Advanced ray tracing and DLSS 3 for superior gaming visuals, along with a focus on real-time performance.

3. Workload Optimization

- The A40 is optimized for workloads requiring long-term stability and precision, such as medical imaging, scientific simulations, and digital twins. It is passively cooled and relies on the airflow of the server chassis, which makes it a natural fit for rack-mounted servers and a poor fit for ordinary desktop cases.

- The RTX 4090, on the other hand, is built to deliver peak performance in short bursts, perfect for gaming and creative workflows. Its active cooling system is designed for desktops.

4. Pricing and Availability

- NVIDIA A40: Significantly more expensive due to its enterprise-grade features and support, often found in the $4,000–$6,000 range.

- RTX 4090: Aimed at individual consumers and priced around $1,600–$2,000.
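To put the price gap in context, the price ranges above can be normalized by memory capacity. The sketch below uses the midpoint of each range quoted in this section; the midpoint choice and the helper names are assumptions for illustration, and street prices vary widely.

```python
# Price per GB of GPU memory, using the ranges quoted in this section.
# Midpoints are an illustrative simplification, not market data.

GPUS = {
    "NVIDIA A40": {"price_range": (4000, 6000), "memory_gb": 48},
    "RTX 4090": {"price_range": (1600, 2000), "memory_gb": 24},
}


def midpoint(price_range: tuple) -> float:
    """Return the midpoint of a (low, high) price range in USD."""
    low, high = price_range
    return (low + high) / 2


def price_per_gb(gpu: dict) -> float:
    """Return the midpoint price divided by memory capacity (USD per GB)."""
    return midpoint(gpu["price_range"]) / gpu["memory_gb"]


if __name__ == "__main__":
    for name, gpu in GPUS.items():
        print(f"{name}: about ${price_per_gb(gpu):.0f} per GB of memory")
```

On this measure the RTX 4090 is cheaper per gigabyte, but a single card tops out at 24GB. A workload that needs more than that on one GPU is where the A40’s 48GB and ECC support justify the premium.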

5. Conclusion

Choosing between the NVIDIA A40 and the RTX 4090 depends on your specific needs. If you require a GPU for professional and enterprise workloads with high reliability and memory capacity, the A40 is the better choice. For gaming, content creation, and real-time performance on a desktop, the RTX 4090 offers far more performance for the money.

NVIDIA A40 vs A100

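The NVIDIA A40 and A100 GPUs are both based on the Ampere architecture, but the A100 is a higher-end model designed for the most demanding AI and machine learning workloads.

- CUDA Cores: The A100 has 6,912 CUDA cores, while the A40 has 10,752. This gives the A40 higher peak FP32 throughput, but core count alone does not decide AI performance: the A100 has much higher Tensor Core throughput, far stronger FP64 performance, and more memory bandwidth.

- Memory: The A100 comes with 40GB of HBM2 or 80GB of HBM2e memory, with roughly two to three times the bandwidth of the A40’s 48GB of GDDR6.

- Performance: The A100 is optimized for training large AI models and has higher tensor performance, making it better suited for extremely large-scale deep learning tasks. It also supports Multi-Instance GPU (MIG) partitioning, which the A40 does not. In contrast, the A40 handles medium-to-large-scale workloads well, adds RT Cores and display outputs for graphics work, and is more affordable.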

While the A100 is the go-to choice for top-tier AI researchers, the A40 strikes a balance between performance and price, making it ideal for professionals who need excellent performance without the A100’s hefty price tag.

NVIDIA A40 vs A6000

The NVIDIA RTX A6000 is another powerful GPU from NVIDIA’s lineup, aimed at professional workstations. The A40 and A6000 are built on the same Ampere GPU, so the differences are mostly about deployment:

- Memory: The A6000 has 48GB of GDDR6 memory, the same capacity as the A40, with somewhat higher memory bandwidth (768 GB/s). It uses an active blower-style cooler, whereas the A40 is passively cooled and depends on server airflow.

- CUDA Cores: The A6000 has 10,752 CUDA cores, matching the A40 in this regard.

- Target Audience: The A6000 is more suited to workstation users in the creative, entertainment, and data science industries, including graphics professionals and rendering specialists who need the card in a desktop tower.

Because the two cards are so close in raw capability, the choice usually comes down to where the GPU will run. The A40 is the better choice for enterprises deploying in rack-mounted servers, while the A6000 fits individual workstations. Pricing for the two varies by vendor, so it is worth comparing current quotes.

What is the GPU Profile of A40?

The GPU profile of the A40 focuses on delivering high throughput for AI, deep learning, and HPC tasks, alongside excellent graphics rendering capabilities. The GPU’s architecture is highly parallel, allowing for efficient processing of large datasets. With over 10,000 CUDA cores and 48GB of GDDR6 memory, the A40 can handle parallel workloads with ease.

The A40 also includes third-generation Tensor Cores, which are specialized for the matrix operations at the heart of deep learning. This makes the A40 a suitable option for training AI models, performing simulations, and other high-performance computing tasks.

What is the Successor of the NVIDIA A40?

The successor to the NVIDIA A40 is the NVIDIA L40, which is based on the Ada Lovelace architecture. NVIDIA has not released a card called the A50. The L40 keeps 48GB of GDDR6 memory with ECC and a 300W power envelope, but raises the CUDA core count to 18,176 and adds fourth-generation Tensor Cores with FP8 support. NVIDIA later added the L40S, a variant tuned more heavily for AI training and inference. For A40 users, the move to the L40 generation mainly brings higher throughput for AI, rendering, and large-scale data processing within a similar power budget.

What is the Difference Between A40 and L40 GPUs?

The NVIDIA L40 is a newer data center GPU based on the Ada Lovelace architecture, while the A40 is based on Ampere. Both cards offer 48GB of GDDR6 memory with ECC in a passively cooled, dual-slot PCIe design, but the L40 has more CUDA cores (18,176 versus 10,752), higher memory bandwidth (864 GB/s versus roughly 696 GB/s), and newer Tensor Cores and RT Cores. One notable trade-off is that the L40 does not support NVLink, which the A40 does.

The A40 remains a capable performer, but the L40 suits users who need more AI and graphics throughput and are willing to pay for newer hardware.

NVIDIA A40 CUDA Cores

The A40 GPU is equipped with 10,752 CUDA cores, making it capable of handling complex parallel computing tasks. CUDA cores are the primary processing units of NVIDIA GPUs and are essential for running computations in AI, scientific simulations, and real-time rendering.

The sheer number of CUDA cores enables the A40 to deliver impressive performance in AI model training, inference, and data processing tasks.
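The core counts quoted in this article follow from how the GPU is organized into streaming multiprocessors (SMs). The sketch below assumes 84 SMs with 128 FP32 CUDA cores and 4 Tensor Cores each; that layout is not stated in this article and should be read as an assumption about the underlying Ampere chip.

```python
# Reconstruct the headline core counts from an assumed per-SM layout.
# ASSUMPTION (not stated in this article): 84 SMs, each with
# 128 FP32 CUDA cores and 4 Tensor Cores.

SM_COUNT = 84
FP32_CORES_PER_SM = 128
TENSOR_CORES_PER_SM = 4


def total_units(sm_count: int, units_per_sm: int) -> int:
    """Total execution units across all streaming multiprocessors."""
    return sm_count * units_per_sm


if __name__ == "__main__":
    print("CUDA cores:  ", total_units(SM_COUNT, FP32_CORES_PER_SM))
    print("Tensor Cores:", total_units(SM_COUNT, TENSOR_CORES_PER_SM))
```

Both totals match the CUDA core and Tensor Core counts in the spec list, which suggests the assumed layout is consistent with the published figures.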

NVIDIA A40 Datasheet

For more detailed specifications and technical data, users can refer to the NVIDIA A40 datasheet, which outlines the GPU’s architecture, performance metrics, power requirements, and supported features. It includes all the details that professionals need to implement the A40 in their computing setups.
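On a machine with the NVIDIA driver installed, the installed card can be checked against the datasheet by querying `nvidia-smi`. The sketch below is one way to do that from Python; the function names are hypothetical, and the sample line in the comment is illustrative rather than captured from a real system.

```python
import shutil
import subprocess

# Fields requested from nvidia-smi, in the order they are parsed below.
QUERY_FIELDS = ["name", "memory.total", "power.limit"]


def _to_float(text: str):
    """Convert a numeric field to float, or None if it is not reported."""
    try:
        return float(text)
    except ValueError:
        return None


def parse_gpu_csv(text: str) -> list:
    """Parse `--format=csv,noheader,nounits` output, one GPU per line.

    Illustrative input line: "NVIDIA A40, 46068, 300.00"
    (memory in MiB, power limit in watts).
    """
    gpus = []
    for line in text.strip().splitlines():
        name, mem_mib, power_w = [part.strip() for part in line.split(",")]
        gpus.append({
            "name": name,
            "memory_mib": int(mem_mib),
            "power_limit_w": _to_float(power_w),
        })
    return gpus


def query_gpus() -> list:
    """Return GPU info from nvidia-smi, or an empty list if it is absent."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        ["nvidia-smi",
         f"--query-gpu={','.join(QUERY_FIELDS)}",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    )
    return parse_gpu_csv(result.stdout)


if __name__ == "__main__":
    for gpu in query_gpus():
        print(gpu)
```

Keeping the parsing separate from the subprocess call means the parser can be exercised on a laptop without a GPU. Note that the reported memory is usually slightly below the nominal 48GB.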

Conclusion

The NVIDIA A40 GPU is a powerful and comparatively affordable option for AI, machine learning, deep learning, and high-performance computing workloads. With 48GB of memory, over 10,000 CUDA cores, and solid benchmark results, it sits between lower-cost options like the A10 and higher-end models like the A100. Compared to the A6000, it offers essentially the same hardware in a passively cooled, server-oriented design, making it a sensible choice for enterprises and professionals who need solid data center performance without paying A100 prices.