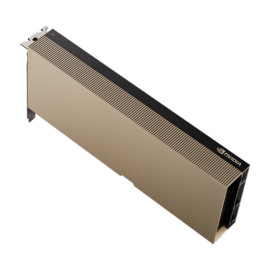

The NVIDIA A100 Tensor Core GPU is one of the most powerful graphics processing units (GPUs) designed for high-performance computing (HPC), artificial intelligence (AI), deep learning, and data center workloads. With its advanced Tensor Core technology, the A100 is built to accelerate machine learning tasks, deep learning model training, and complex simulations. In this article, we’ll explore the NVIDIA A100 Tensor Core GPU specs, its price, and how it compares to other GPUs like the RTX 4090. We’ll also answer the question: Can the NVIDIA A100 be used for gaming?

What is the NVIDIA A100 Tensor Core GPU?

The NVIDIA A100 Tensor Core GPU is built on Ampere, the NVIDIA architecture that succeeded Volta in 2020 and targets AI, machine learning, and deep learning. The A100 is specifically designed to handle the massive computational requirements of AI workloads. Its Tensor Cores accelerate deep learning tasks, and NVIDIA cites up to 20x higher performance than the previous-generation V100 on selected AI workloads, a best-case figure that relies on the TF32 format and structured sparsity.

The A100 is commonly used in data centers, AI research, and enterprise-level computing, where raw computational power and scalability are crucial for processing large datasets and training complex neural networks. The A100 excels in tasks such as training large deep learning models, running simulations, and AI inference.

NVIDIA A100 Tensor Core GPU Specs

Here are the key specs of the NVIDIA A100 Tensor Core GPU:

- CUDA Cores: 6,912 CUDA cores

- Tensor Cores: 432 third-generation Tensor Cores for deep learning acceleration

- GPU Architecture: Ampere

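- Memory: 40GB of HBM2 or 80GB of HBM2e (High Bandwidth Memory)

- Memory Bandwidth: About 1.6TB/s on the 40GB model and roughly 2TB/s on the 80GB model

- Compute Power: Up to 312 teraflops of dense FP16 Tensor Core throughput for deep learning (624 teraflops with structured sparsity)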

- Form Factor: Available in both PCIe and SXM4 configurations

The A100’s combination of high memory bandwidth and Tensor Cores makes it exceptionally powerful for AI and machine learning applications. Its ability to handle both training and inference workloads with ease sets it apart from many consumer-focused GPUs.
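The core counts and the FP16 figure in the list above can be reproduced with simple arithmetic. The sketch below assumes per-SM figures from NVIDIA's published A100 specifications that this article does not state (108 enabled SMs, 64 FP32 cores and 4 Tensor Cores per SM, 256 FP16 fused multiply-adds per Tensor Core per clock, and a boost clock of about 1.41 GHz). Treat it as a back-of-the-envelope check, not a benchmark.

```python
# Assumed figures from NVIDIA's published A100 specifications (not stated in this article).
SM_COUNT = 108                             # enabled streaming multiprocessors
FP32_CORES_PER_SM = 64                     # CUDA cores per SM
TENSOR_CORES_PER_SM = 4                    # third-generation Tensor Cores per SM
FP16_FMA_PER_TENSOR_CORE_PER_CLOCK = 256   # dense FP16 fused multiply-adds
BOOST_CLOCK_HZ = 1.41e9                    # approximate boost clock


def cuda_cores():
    """Total CUDA cores across all enabled SMs."""
    return SM_COUNT * FP32_CORES_PER_SM


def tensor_cores():
    """Total Tensor Cores across all enabled SMs."""
    return SM_COUNT * TENSOR_CORES_PER_SM


def peak_fp16_tensor_tflops():
    """Theoretical dense FP16 Tensor Core peak; one FMA counts as 2 FLOPs."""
    flops_per_second = (
        tensor_cores() * FP16_FMA_PER_TENSOR_CORE_PER_CLOCK * 2 * BOOST_CLOCK_HZ
    )
    return flops_per_second / 1e12


if __name__ == "__main__":
    print(cuda_cores(), tensor_cores(), round(peak_fp16_tensor_tflops()))
```

Under these assumptions the functions return 6,912 CUDA cores, 432 Tensor Cores, and a peak that rounds to 312 teraflops, matching the listed specs. Real workloads land below this theoretical ceiling.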

NVIDIA A100 80GB Price

The NVIDIA A100 80GB price varies depending on the retailer and availability. On average, the A100 80GB model is priced around $15,000 to $20,000. The cost reflects its enterprise-grade performance, and the card is typically purchased by large organizations, research institutions, and cloud service providers that need top-tier performance for AI and machine learning workloads. Prices can fluctuate based on demand, availability, and the specific configuration (PCIe vs. SXM4).

NVIDIA A100 Tensor Core GPU Benchmark

The NVIDIA A100 benchmark demonstrates its unmatched performance for AI and machine learning tasks. Here are some key performance metrics based on benchmarks:

- AI Training Performance: The A100 provides up to 2.5x the performance of the previous-generation V100 and up to 7x the performance of the P100 in certain deep learning benchmarks.

- Deep Learning FP16 Throughput: The A100 can achieve up to 312 teraflops of AI training performance for FP16 (half-precision floating-point) operations, making it ideal for training large-scale neural networks.

- AI Inference: The A100 accelerates inference through reduced-precision formats, delivering up to 624 TOPS of INT8 Tensor Core throughput (1,248 TOPS with structured sparsity). Frameworks such as TensorFlow and PyTorch can take advantage of these modes.

These benchmarks make the A100 a go-to GPU for AI research, large-scale machine learning model development, and high-performance computing applications.
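Peak teraflops only matter when a workload can keep the Tensor Cores fed from memory. A simple roofline estimate makes this concrete. The sketch below uses the 312 teraflops and 1.6TB/s figures quoted in this article; the function names and the two example workloads are illustrative assumptions, and the model ignores caches, kernel launch overhead, and everything else a real benchmark would capture.

```python
PEAK_FP16_TFLOPS = 312.0       # FP16 Tensor Core peak quoted in this article
MEMORY_BANDWIDTH_TBPS = 1.6    # memory bandwidth quoted in this article


def ridge_point_flops_per_byte():
    """Arithmetic intensity above which a kernel becomes compute-bound."""
    return PEAK_FP16_TFLOPS / MEMORY_BANDWIDTH_TBPS


def attainable_tflops(flops_per_byte):
    """Roofline bound: the lower of the compute peak and the bandwidth limit."""
    return min(PEAK_FP16_TFLOPS, flops_per_byte * MEMORY_BANDWIDTH_TBPS)


def square_matmul_intensity(n, bytes_per_element=2):
    """FLOPs per byte for an n x n matmul, assuming each matrix moves once."""
    flops = 2 * n ** 3                           # n^3 multiply-adds
    bytes_moved = 3 * n * n * bytes_per_element  # read A and B, write C
    return flops / bytes_moved


# Elementwise FP16 add: 1 FLOP for every three 2-byte values moved.
ELEMENTWISE_ADD_INTENSITY = 1 / 6

if __name__ == "__main__":
    print(ridge_point_flops_per_byte())
    print(attainable_tflops(ELEMENTWISE_ADD_INTENSITY))
    print(attainable_tflops(square_matmul_intensity(4096)))
```

With these numbers the ridge point sits near 195 FLOPs per byte. A large matrix multiplication clears it comfortably and can approach the compute peak, while an elementwise operation stays bandwidth-bound at a fraction of a teraflop, which is why deep learning frameworks fuse small operations where they can.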

NVIDIA A100 CUDA Cores

The A100 has 6,912 CUDA cores, a significant increase over the previous-generation V100 (5,120) and P100 (3,584) GPUs. CUDA cores are the basic computational units of NVIDIA GPUs, responsible for performing parallel processing tasks. In the A100, the large number of CUDA cores enables exceptional computational power, making it ideal for tasks such as deep learning training, simulations, and high-performance computing applications.

The A100’s CUDA cores, combined with the Tensor Cores and high memory bandwidth, allow it to achieve unparalleled efficiency and performance in AI and HPC workloads.
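To see what the CUDA core count means for standard (non-Tensor-Core) FP32 work, peak throughput can be estimated as cores x 2 FLOPs per clock x clock speed. The core counts and boost clocks for the V100 and P100 below are assumptions taken from NVIDIA's published SXM specifications, not from this article, and the result is a theoretical ceiling, not a measured number.

```python
# name -> (CUDA cores, approximate boost clock in GHz); SXM variants assumed.
GPUS = {
    "P100": (3584, 1.48),
    "V100": (5120, 1.53),
    "A100": (6912, 1.41),
}


def peak_fp32_tflops(cuda_cores, boost_clock_ghz):
    """Theoretical FP32 peak: each core retires one FMA (2 FLOPs) per clock."""
    return cuda_cores * 2 * boost_clock_ghz / 1000.0


def peak_for(name):
    """Look up a GPU by name and return its theoretical FP32 peak in TFLOPS."""
    cores, clock = GPUS[name]
    return peak_fp32_tflops(cores, clock)


if __name__ == "__main__":
    for name in GPUS:
        print(name, round(peak_for(name), 1))
```

Under these assumptions the A100's FP32 peak is only about 1.25x the V100's, far smaller than its advantage in Tensor Core throughput, which is where most of the deep learning gain comes from.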

NVIDIA A100 Tensor Core GPU Architecture

The NVIDIA A100 Tensor Core GPU architecture is based on the Ampere microarchitecture, which is built specifically for AI and deep learning workloads. The A100 features third-generation Tensor Cores, which significantly improve performance for matrix operations, a fundamental operation in deep learning algorithms.

Key aspects of the A100’s architecture include:

- Third-Generation Tensor Cores: These Tensor Cores accelerate AI operations such as matrix multiplication and convolution. NVIDIA cites speedups of up to 20x over the previous-generation V100 for AI workloads, a best-case figure that depends on TF32 precision and structured sparsity.

- Multi-Instance GPU (MIG): The A100 supports multi-instance GPU technology, which allows users to partition the GPU into as many as seven isolated instances, each with its own share of memory, cache, and compute. This improves GPU utilization and lets the A100 run multiple workloads simultaneously, making it highly efficient for data centers and cloud environments.

- Unified Memory: The A100 supports CUDA Unified Memory, which gives the CPU and GPU a single address space and makes it easier to manage large datasets in AI training tasks. Data migrates between CPU and GPU memory on demand instead of through explicit copies in application code, which simplifies programming, although hand-tuned explicit transfers can still be faster.

These architectural improvements give the A100 a significant edge in AI and machine learning tasks, providing unrivaled performance and scalability.
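As a rough illustration of how MIG partitioning is budgeted, the sketch below models a 40GB A100 as 7 compute slices and 8 memory slices and checks whether a requested mix of instances fits. The profile table is an assumption based on NVIDIA's documented MIG profile names for the 40GB card, and the function name is hypothetical. Staying within the slice budget is a necessary condition only; the driver enforces additional placement rules, so the NVIDIA tooling remains the authority on which combinations are valid.

```python
# Assumed MIG profiles for the 40GB A100: name -> (compute slices, memory slices).
PROFILES = {
    "1g.5gb": (1, 1),
    "2g.10gb": (2, 2),
    "3g.20gb": (3, 4),
    "4g.20gb": (4, 4),
    "7g.40gb": (7, 8),
}

TOTAL_COMPUTE_SLICES = 7
TOTAL_MEMORY_SLICES = 8


def fits_slice_budget(requested):
    """Return True if the requested profiles stay within the slice totals.

    This checks the budget only; real MIG placement has further constraints.
    """
    compute = sum(PROFILES[name][0] for name in requested)
    memory = sum(PROFILES[name][1] for name in requested)
    return compute <= TOTAL_COMPUTE_SLICES and memory <= TOTAL_MEMORY_SLICES


if __name__ == "__main__":
    print(fits_slice_budget(["1g.5gb"] * 7))
    print(fits_slice_budget(["4g.20gb", "3g.20gb"]))
    print(fits_slice_budget(["4g.20gb", "4g.20gb"]))
```

Seven small instances or one 4-slice plus one 3-slice instance stay within the budget, while two 4-slice instances would need eight compute slices and do not.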

NVIDIA A100 vs RTX 4090

The NVIDIA A100 vs RTX 4090 comparison highlights the differences between these two powerful GPUs, each designed for different use cases:

- Target Use Case: The A100 is designed primarily for AI, deep learning, and high-performance computing tasks in data centers and research labs. In contrast, the RTX 4090 is geared towards gaming, content creation, and consumer-level applications, though it can also handle some AI tasks.

- Performance: The A100 offers superior performance for large-scale AI and machine learning workloads, with up to 80GB of high-bandwidth memory, NVLink for multi-GPU scaling, and support for multi-instance GPU (MIG). The RTX 4090 is extremely powerful for gaming and rendering and has Tensor Cores of its own, so it handles smaller AI workloads well, but its 24GB of GDDR6X memory and lack of NVLink and MIG keep it from matching the A100 for data center training.

- Price: The RTX 4090 is priced around $1,500 to $2,000, making it far more affordable than the A100, which costs $15,000 or more for the 80GB variant.

If you’re focused on AI research, data center applications, or machine learning model training, the A100 is the clear winner. However, if you’re a gamer or content creator looking for a high-performance GPU, the RTX 4090 provides exceptional performance for ray tracing, 4K gaming, and 3D rendering.
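For AI work, memory capacity is often the deciding factor between these cards. The sketch below estimates whether a model's FP16 weights fit on each GPU, assuming 2 bytes per parameter. The 24GB figure for the RTX 4090 is an assumption from NVIDIA's published specs, not from this article, and the estimate ignores activations, optimizer state, and framework overhead, all of which add substantially to real memory use.

```python
# Memory capacity per card in GB (1 GB = 1e9 bytes for this rough estimate).
GPU_MEMORY_GB = {
    "RTX 4090": 24,    # assumed from NVIDIA's published specs
    "A100 40GB": 40,
    "A100 80GB": 80,
}

BYTES_PER_FP16_PARAM = 2


def fp16_weights_gb(num_params):
    """Memory needed to hold the model weights alone in FP16."""
    return num_params * BYTES_PER_FP16_PARAM / 1e9


def gpus_that_fit(num_params):
    """Names of GPUs whose capacity covers the FP16 weights alone."""
    needed = fp16_weights_gb(num_params)
    return [name for name, capacity in GPU_MEMORY_GB.items() if needed <= capacity]


if __name__ == "__main__":
    for params in (7_000_000_000, 13_000_000_000, 70_000_000_000):
        print(params, fp16_weights_gb(params), gpus_that_fit(params))
```

By this estimate a 7-billion-parameter model fits on all three cards, a 13-billion-parameter model needs an A100, and a 70-billion-parameter model exceeds any single card and has to be split across several GPUs.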

Can the NVIDIA A100 Be Used for Gaming?

While the NVIDIA A100 is an incredibly powerful GPU, it is not designed for gaming. The A100 is optimized for AI and deep learning workloads, with features like Tensor Cores and high memory bandwidth tailored to accelerate AI tasks. It has no display outputs and no RT cores for hardware ray tracing, so even where a game can be made to run on it, the A100 would not provide a better gaming experience than a high-end consumer GPU like the RTX 4090 or RTX 4080.

The A100 is built for data centers and enterprise AI systems, where its power consumption and cost are justified by the need for rapid AI processing. For gaming, GPUs like the RTX 4090, RTX 4080, or even older models like the RTX 3080 or RTX 3070 are far more suitable and affordable.

Conclusion

The NVIDIA A100 Tensor Core GPU is a powerhouse designed to meet the demands of AI, machine learning, and high-performance computing. With its impressive CUDA cores, Tensor Core architecture, and massive memory bandwidth, the A100 is capable of handling some of the most challenging computational tasks at scale. Its price point, however, reflects its enterprise-level performance, making it best suited for organizations, researchers, and data centers.

While it offers superior performance for AI tasks, the NVIDIA A100 vs RTX 4090 comparison shows that for gaming, the RTX 4090 is the better choice. For AI professionals looking to push the boundaries of what’s possible in deep learning and data science, the A100 remains one of the best GPUs available on the market today.