In AI training, hardware and software play a crucial role. Whether it’s training large language models or other types of AI models (such as computer vision, reinforcement learning, or recommendation systems), powerful computational capabilities and efficient resource management are essential. This blog explains how AI models are created: the hardware and software required for AI training, and how they work together to produce a trained model.

1. Hardware and Software Requirements for AI Training

1.1 Hardware Requirements

AI training typically relies on the following hardware components:

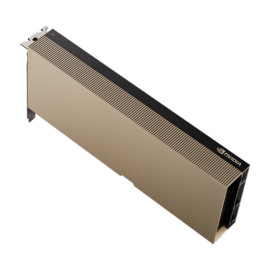

- GPU (Graphics Processing Unit) / TPU (Tensor Processing Unit): These hardware accelerators are primarily used to perform large-scale matrix computations, accelerating the training of deep learning models. Common examples include NVIDIA’s A100 and H100 GPUs and Google’s TPU v4. Different AI tasks (such as image recognition, natural language processing, or reinforcement learning) may have varying hardware requirements.

- CPU (Central Processing Unit): In AI training, the CPU is responsible for data preprocessing, task scheduling, and model management. AI servers usually use high-performance server CPUs such as Intel Xeon or AMD EPYC, often with two or more processors per server.

- Memory (RAM): AI training servers need substantial system memory for data loading, preprocessing buffers, and other temporary data (during training, the model’s intermediate activations live mostly in GPU memory). Servers are commonly configured with 512GB or more, and large nodes can reach several terabytes depending on the size and complexity of the workload.

- Storage (SSD/HDD): Storage holds the training data and model files such as checkpoints. High-speed NVMe SSDs are recommended to support rapid data reading and writing.

- High-Speed Interconnect (NVLink / InfiniBand): These are used for data transfer between GPUs and between servers, enhancing computational efficiency. NVLink is used for GPU-to-GPU interconnect within a single server, while InfiniBand is used for high-speed communication between multiple servers.
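To see why memory needs grow with model size, here is a back-of-the-envelope sketch in Python. It assumes mixed-precision training with the Adam optimizer, which is commonly estimated at about 16 bytes of state per parameter; that figure and the 80GB GPU used in the example are assumptions, and activations are ignored. This state lives mainly in accelerator (GPU/TPU) memory rather than system RAM, but the same arithmetic shows why capacity requirements climb so quickly.

```python
import math

# Assumption: mixed-precision training with Adam, commonly estimated at
# 2 (fp16 weights) + 2 (fp16 gradients) + 4 + 4 + 4 (fp32 master weights,
# Adam momentum and Adam variance) = 16 bytes per parameter.
# Activations, data buffers and framework overhead are NOT included.
BYTES_PER_PARAM = 2 + 2 + 4 + 4 + 4


def training_state_gb(num_params: float, bytes_per_param: int = BYTES_PER_PARAM) -> float:
    """Memory in GB (1 GB = 1e9 bytes) for weights, gradients and optimizer state."""
    return num_params * bytes_per_param / 1e9


def min_gpus_for_state(num_params: float, gpu_memory_gb: float) -> int:
    """Fewest GPUs whose combined memory could hold that state if it were split
    evenly across them (activations ignored, so real requirements are higher)."""
    return math.ceil(training_state_gb(num_params) / gpu_memory_gb)


for params in (1e9, 7e9, 70e9):
    print(f"{params / 1e9:.0f}B parameters: {training_state_gb(params):.0f} GB of model state, "
          f"at least {min_gpus_for_state(params, 80)} x 80GB GPUs")
```

Because the estimate is linear in the parameter count, a model ten times larger needs roughly ten times the memory for its training state.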

1.2 Software Requirements

AI training involves multiple layers of software, including:

- Deep Learning Frameworks: Frameworks like TensorFlow, PyTorch, and JAX provide tools for model development and training. These frameworks support features like automatic differentiation and distributed training.

- CUDA/cuDNN: These are NVIDIA’s GPU-acceleration libraries that optimize deep learning computations. CUDA is the parallel computing platform, and cuDNN is the deep learning acceleration library.

- MPI (Message Passing Interface): A standard for communication between processes running on multiple nodes. Distributed training frameworks such as Horovod can use MPI to coordinate workers and exchange data efficiently across servers.

- Distributed File Systems: Systems like Lustre and Ceph offer efficient data access, supporting the storage and retrieval of large-scale datasets.
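Automatic differentiation is what lets these frameworks compute gradients without hand-written derivative code. Below is a minimal, illustrative sketch of reverse-mode autodiff on scalars in plain Python. The `Value` class is hypothetical and is not any framework’s API; real frameworks apply the same idea to tensors, with GPU libraries such as CUDA/cuDNN doing the heavy computation underneath.

```python
class Value:
    """A scalar that records how it was computed so gradients can flow back."""

    def __init__(self, data, parents=(), backward_fn=None):
        self.data = float(data)
        self.grad = 0.0
        self._parents = parents
        self._backward_fn = backward_fn  # pushes self.grad back to the parents

    def __add__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data + other.data, (self, other))

        def backward_fn():
            # d(a + b)/da = 1 and d(a + b)/db = 1
            self.grad += out.grad
            other.grad += out.grad

        out._backward_fn = backward_fn
        return out

    def __mul__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data * other.data, (self, other))

        def backward_fn():
            # d(a * b)/da = b and d(a * b)/db = a
            self.grad += other.data * out.grad
            other.grad += self.data * out.grad

        out._backward_fn = backward_fn
        return out

    __radd__ = __add__
    __rmul__ = __mul__

    def backward(self):
        """Compute d(self)/dx for every Value x that self depends on."""
        order, seen = [], set()

        def visit(node):  # topological sort of the computation graph
            if id(node) not in seen:
                seen.add(id(node))
                for parent in node._parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0
        for node in reversed(order):  # walk from the output back to the inputs
            if node._backward_fn is not None:
                node._backward_fn()


x, y = Value(3.0), Value(4.0)
f = x * y + x           # f = xy + x
f.backward()
print(f.data, x.grad, y.grad)  # 15.0 5.0 3.0  (df/dx = y + 1, df/dy = x)
```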

2. Relationship Between Servers and GPUs

2.1 Server and GPU Architecture

In AI training, multiple servers and GPUs often work together.

- GPUs are installed inside servers, with a single server typically hosting 4-8 GPUs, or even more.

- Multiple servers are connected via InfiniBand or Ethernet to form a compute cluster, allowing a single training job to use far more GPUs than one server can hold.
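To make the server/GPU relationship concrete, here is a small sketch of how the GPUs in a cluster are often numbered when one training process runs per GPU. The layout (ranks assigned server by server) and the names below are assumptions for illustration. Launchers such as PyTorch’s `torchrun` expose comparable values to each process through environment variables like `RANK`, `LOCAL_RANK` and `WORLD_SIZE`.

```python
from typing import List, NamedTuple


class Worker(NamedTuple):
    global_rank: int  # unique ID across the whole cluster
    node_rank: int    # which server
    local_rank: int   # which GPU inside that server


def cluster_layout(num_nodes: int, gpus_per_node: int) -> List[Worker]:
    """Assumption: every server has the same number of GPUs, one process per GPU,
    and ranks are numbered server by server (a common, not universal, layout)."""
    return [
        Worker(node * gpus_per_node + gpu, node, gpu)
        for node in range(num_nodes)
        for gpu in range(gpus_per_node)
    ]


workers = cluster_layout(num_nodes=4, gpus_per_node=8)
print(len(workers))  # world size: 32 GPUs in total
print(workers[9])    # Worker(global_rank=9, node_rank=1, local_rank=1)
```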

2.2 Components of a Server

An AI server typically includes:

- CPU (Dual/Quad Processor): Responsible for task scheduling and data preprocessing.

- GPU (typically 4-8, sometimes more): Used for deep learning computations, e.g., NVIDIA A100 or H100.

- Memory (512GB or more): Stores data needed during computation.

- High-Speed Storage (NVMe SSD): Allows fast reading of training data, supporting high throughput and low latency.

- High-Speed Interconnect (NVLink / PCIe): Enhances data transfer efficiency between GPUs. NVLink is used for high-speed communication between GPUs, while PCIe is used for communication between GPUs and CPUs.

- Network Interface (InfiniBand): Used for server-to-server communication, supporting high-bandwidth and low-latency data transfer.

3. Distribution of Training Tasks and GPU/TPU Resource Management

3.1 Definition of Training Tasks

AI training tasks generally include:

- Data Loading: This involves preprocessing, augmentation, and batching operations on the dataset.

- Forward Propagation: The calculation of the model’s output and loss value.

- Backward Propagation: The computation of gradients of the loss with respect to the model parameters, followed by the optimizer step that updates those parameters.

- Model Checkpointing: Periodically saving the model state for recovery in case of interruptions during training.
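Checkpointing can be sketched in a few lines of Python. This is a hedged illustration: the JSON format, the helper names and the placeholder “update” are hypothetical, and real frameworks offer their own save/load utilities (in PyTorch, for example, `torch.save` and `torch.load`). Writing to a temporary file and then renaming it is one common way to avoid leaving a half-written checkpoint if training is interrupted.

```python
import json
import os
import tempfile


def save_checkpoint(path: str, step: int, params: dict) -> None:
    """Write to a temporary file first, then rename it over the old checkpoint,
    so an interruption mid-write does not corrupt the last good checkpoint."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "w") as f:
        json.dump({"step": step, "params": params}, f)
    os.replace(tmp_path, path)  # the rename is atomic on POSIX file systems


def load_checkpoint(path: str):
    """Return (step, params) from the last checkpoint, or (0, None) if there is none."""
    if not os.path.exists(path):
        return 0, None
    with open(path) as f:
        state = json.load(f)
    return state["step"], state["params"]


def train_with_checkpoints(path: str, total_steps: int, save_every: int) -> dict:
    step, params = load_checkpoint(path)  # resume from the last checkpoint if one exists
    params = params or {"w": 0.0}
    while step < total_steps:
        params["w"] += 0.1                # placeholder for a real training step
        step += 1
        if step % save_every == 0:
            save_checkpoint(path, step, params)
    return params
```

Calling `train_with_checkpoints` a second time with the same path resumes from the saved step instead of starting over.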

3.2 How Tasks Are Allocated to GPUs/TPUs

In multi-GPU/TPU training, tasks are distributed using distributed computing strategies:

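- Data Parallelism: Each GPU holds a full copy of the model and processes a different slice of the data; the GPUs average their gradients after each step so that all copies stay identical. It is the most common strategy and is widely used for large-batch training, for example of BERT- or GPT-style models.

- Model Parallelism: Different GPUs hold and compute different parts of the model (for example, different layers or different slices of a large weight matrix). It is needed for models too large to fit in a single GPU’s memory, such as the largest language models with hundreds of billions of parameters.

- Pipeline Parallelism: A form of model parallelism in which the model’s layers are split into sequential stages on different GPUs, and each batch is divided into micro-batches that flow through the stages, so several GPUs can work at the same time instead of waiting on each other.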

Frameworks such as PyTorch Distributed (e.g., DistributedDataParallel) and TensorFlow’s tf.distribute.MirroredStrategy handle much of this distribution and the required inter-GPU communication automatically.
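The core idea of data parallelism is that averaging the gradients computed on equal-sized shards gives the same result as computing the gradient on the whole batch, so every model replica stays identical. The plain-Python simulation below sketches that idea under stated assumptions: a toy linear model, a mean-squared-error loss, and a batch that divides evenly among the simulated workers. The function names are hypothetical; in practice PyTorch’s DistributedDataParallel performs the averaging with collective communication on real GPUs.

```python
from typing import List, Tuple

Batch = List[Tuple[float, float]]  # (x, y) pairs


def local_gradient(w: float, b: float, shard: Batch) -> Tuple[float, float]:
    """Gradient of the mean squared error of y = w*x + b over one worker's shard."""
    n = len(shard)
    dw = sum(2 * (w * x + b - y) * x for x, y in shard) / n
    db = sum(2 * (w * x + b - y) for x, y in shard) / n
    return dw, db


def all_reduce_mean(grads: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Stand-in for an all-reduce: average gradients so every replica gets the same update."""
    k = len(grads)
    return sum(g[0] for g in grads) / k, sum(g[1] for g in grads) / k


def data_parallel_step(w: float, b: float, batch: Batch, num_workers: int, lr: float):
    shard_size = len(batch) // num_workers
    assert shard_size * num_workers == len(batch), "batch must split evenly"
    shards = [batch[i * shard_size:(i + 1) * shard_size] for i in range(num_workers)]
    grads = [local_gradient(w, b, s) for s in shards]  # would run in parallel on real GPUs
    dw, db = all_reduce_mean(grads)
    return w - lr * dw, b - lr * db  # identical update applied on every replica


# Toy data from y = 3x + 1; 4 simulated GPUs each see 2 of the 8 examples per step.
batch = [(x, 3 * x + 1) for x in (0.0, 0.5, 1.0, 1.5, 2.0, 2.5, 3.0, 3.5)]
w, b = 0.0, 0.0
for _ in range(2000):
    w, b = data_parallel_step(w, b, batch, num_workers=4, lr=0.05)
print(round(w, 3), round(b, 3))  # converges towards 3.0 and 1.0
```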

4. Role of NVLink and InfiniBand and Their Connectivity

4.1 NVLink: GPU Interconnect Within a Server

What is NVLink?

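NVLink is NVIDIA’s high-speed GPU interconnect technology, offering roughly 7 times the bandwidth of PCIe 5.0: a PCIe 5.0 x16 link provides about 128GB/s (bidirectional), while fourth-generation NVLink on the H100 provides up to 900GB/s per GPU. This makes it well suited to high-end data-center GPUs such as the NVIDIA A100 (600GB/s with third-generation NVLink) and H100.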

NVLink Devices and Installation

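- NVLink Bridge (Small Scale, typically pairs of GPUs): Suitable for workstation-class cards such as the RTX A6000, directly connecting two GPUs through their NVLink connectors. Consumer cards such as the RTX 4090 do not support NVLink.

- NVSwitch (Large Scale, 8+ GPUs): Used in DGX and HGX servers, where NVSwitch chips sit on the GPU baseboard and connect every GPU to every other GPU over NVLink at full bandwidth.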

4.2 InfiniBand: High-Speed Interconnect Between Servers

What is InfiniBand?

InfiniBand is a high-speed network used for communication between multiple servers. Compared with standard Ethernet, its main advantages are very low latency and native support for RDMA. Common link rates are 100G (EDR), 200G (HDR) and 400G (NDR) per port, with end-to-end latency on the order of 1-2 microseconds, and it is widely used in large AI training clusters such as NVIDIA DGX POD and SuperPOD.

Main Components:

- InfiniBand NIC (Host Channel Adapter, HCA): Such as the Mellanox ConnectX-7.

- InfiniBand Switch: For example, the Mellanox Quantum-2.

InfiniBand Connectivity:

- Single Server with InfiniBand NIC: Each server can be fitted with one or more Mellanox ConnectX-7 PCIe 5.0 400G NICs, which use RDMA (Remote Direct Memory Access) to move data directly between the memory of different servers with minimal CPU involvement.

- Multiple Servers Connected via InfiniBand Switch: Each server connects to an InfiniBand switch (such as the Quantum-2, which provides 400G ports) over optical cables, enabling low-latency data transfer across the cluster.

5. NVLink vs. InfiniBand Comparison

| Feature | NVLink | InfiniBand |
|---|---|---|
| Function | GPU-to-GPU interconnect | Server-to-server communication |
| Bandwidth | Up to 900GB/s per GPU (H100 with NVSwitch) | 400Gb/s per port (NDR), about 50GB/s |
| Connecting Devices | GPU ↔ GPU | Server ↔ Server |
| Use Case | Single AI server (DGX/Workstation) | Large-scale AI training clusters (SuperPOD) |
| Hardware | NVLink Bridge / NVSwitch | Mellanox ConnectX-7 / Quantum-2 Switch |
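The bandwidth figures are easier to compare with a little arithmetic, especially since NVLink is quoted in gigabytes per second and InfiniBand in gigabits per second. The sketch below estimates how long it would take to move one payload over each link at its nominal peak rate. It assumes per-direction bandwidths (half of the bidirectional PCIe and NVLink totals, and 400Gb/s divided by 8 bits per byte for InfiniBand NDR) and a hypothetical 14GB payload, the fp16 gradients of a 7-billion-parameter model; real transfers are slower because of protocol overhead and latency.

```python
# Nominal per-direction peak bandwidths in GB/s (assumptions stated above).
LINKS_GB_PER_S = {
    "PCIe 5.0 x16": 128 / 2,    # about 128 GB/s bidirectional
    "NVLink (H100)": 900 / 2,   # up to 900 GB/s bidirectional per GPU
    "InfiniBand NDR": 400 / 8,  # 400 Gb/s per direction = 50 GB/s
}


def transfer_seconds(num_bytes: float, gb_per_s: float) -> float:
    """Idealized time to move num_bytes at a constant peak rate (no overhead, no latency)."""
    return num_bytes / (gb_per_s * 1e9)


# Hypothetical payload: fp16 gradients of a 7-billion-parameter model (2 bytes each).
payload = 7e9 * 2
for name, bw in LINKS_GB_PER_S.items():
    print(f"{name:15s} {bw:6.1f} GB/s -> {transfer_seconds(payload, bw) * 1000:7.1f} ms")
```

Under these assumptions a single 400G InfiniBand port carries less than a PCIe 5.0 x16 link, which is one reason large training servers are often fitted with several InfiniBand adapters.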

6. The Complete Process of AI Training

6.1 Defining the Problem and Setting Goals

- Task Definition: The first step is to clarify what the AI needs to do. For example, is it identifying whether a picture contains a cat? Or is it predicting the price of a stock in the future?

- Goal Setting: Once the task is defined, we set clear objectives. For instance, you might want the model to correctly identify cats in 90% of 1000 images.

6.2 Data Collection and Preparation

- Data Collection: AI models need data to learn from. For instance, if you’re teaching an AI to recognize cats, you’ll need a lot of pictures of cats (and some pictures without cats). These data can come from websites, company databases, or open datasets.

- Data Cleaning: The data might contain errors or missing parts. For example, some images may be unclear, or labels might be wrong. We need to clean up this data to make sure the AI learns from accurate information.

- Data Labeling: The AI needs to know what it’s looking at—whether it’s a cat or not. These are called “labels.” In supervised learning, each data point needs to have the correct label attached.

- Data Augmentation: If there’s not enough data, we can increase the variety of training examples by performing simple transformations like rotating or changing the brightness of images. This helps the model learn more diverse features and avoid “overfitting” (getting too attached to the specifics of a small set of data).

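- Normalization/Standardization: Data can come in different ranges: some values may be very large, others very small. To make learning easier for the AI, we rescale them to comparable ranges. Normalization typically maps values into a fixed range such as 0 to 1, while standardization shifts them to a mean of 0 and a standard deviation of 1.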
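Two of these preparation steps, augmentation and rescaling, can be sketched without any libraries. The example assumes a tiny grayscale “image” stored as rows of pixel values from 0 to 255; the function names are hypothetical, and real pipelines would use the data utilities of a framework such as PyTorch or TensorFlow.

```python
from statistics import mean, pstdev
from typing import List

Image = List[List[float]]  # rows of grayscale pixel values in 0..255 (assumption)


def horizontal_flip(img: Image) -> Image:
    """Augmentation: mirror the image left to right."""
    return [list(reversed(row)) for row in img]


def adjust_brightness(img: Image, delta: float) -> Image:
    """Augmentation: shift every pixel's brightness, clipping to the valid 0..255 range."""
    return [[min(255.0, max(0.0, float(p) + delta)) for p in row] for row in img]


def min_max_normalize(values: List[float]) -> List[float]:
    """Normalization: rescale values linearly into the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def standardize(values: List[float]) -> List[float]:
    """Standardization: shift to mean 0 and scale to standard deviation 1."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


img = [[0, 128, 255],
       [64, 32, 16]]
print(horizontal_flip(img))             # [[255, 128, 0], [16, 32, 64]]
print(adjust_brightness(img, 50)[0])    # [50.0, 178.0, 255.0]
print(min_max_normalize([10, 20, 30]))  # [0.0, 0.5, 1.0]
```

In practice, the statistics used for rescaling (minimum and maximum, or mean and standard deviation) are computed on the training set and then reused unchanged for validation and test data.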

6.3 Choosing the Model and Designing Architecture

- Choosing an Algorithm: Different tasks require different algorithms. For example, for image recognition we might choose deep learning models such as convolutional neural networks (CNNs), while for predicting an outcome from structured, tabular data (for example, whether an event will occur) simpler models such as decision trees may be enough.

- Designing the Network Structure: In deep learning, the “structure” of the model is very important. It’s like building a house—you need to design the framework, deciding how many layers there are and how many units each layer has. In AI, this means selecting the right architecture (e.g., CNN, RNN, Transformer).

- Setting Hyperparameters: Hyperparameters are settings you need to configure before training, like the learning rate (how fast the model learns), the number of training epochs (how many times the model sees the data), etc. Think of this like cooking—you control the heat and cooking time to make the food come out just right.

6.4 Training the Model

- Initializing Model Parameters: Before training, the model’s parameters (such as weights that help the model decide things like “cat” or “not cat”) are set randomly. The model will adjust these during training.

- Forward Propagation: This is where the model makes a prediction. For example, you input an image, and the model gives you a prediction of whether the image contains a cat or not.

- Calculating Loss: The loss measures how far the model’s prediction is from the true label. If the model says “no cat” but there is a cat in the image, the loss is large. Training aims to make this loss as small as possible.

- Backpropagation: Starting from the loss, backpropagation works backward through the model to compute how much each internal parameter (weight) contributed to the error; these quantities are called gradients. An optimizer, such as gradient descent, then nudges each weight in the direction that reduces the loss. It’s like adjusting the temperature on a stove based on how your dish is turning out.

- Iterative Training: The model repeats forward propagation, loss calculation, and backpropagation over many batches of data. One complete pass over the training set is called an epoch, and training usually runs for many epochs, continuously adjusting the parameters to improve the model’s predictions.
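These steps map onto a short training loop. The sketch below is a toy one-feature logistic-regression classifier in plain Python, not a deep network: the single input feature, the data, and the learning rate are hypothetical, and a real cat detector would be a CNN or Transformer trained with a framework such as PyTorch. The numbered comments mark the steps described above.

```python
import math
import random
from typing import List, Tuple


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train(data: List[Tuple[float, int]], epochs: int = 200, lr: float = 0.5, seed: int = 0):
    rng = random.Random(seed)
    w, b = rng.uniform(-0.1, 0.1), 0.0       # 1. initialize parameters randomly
    history = []
    for _ in range(epochs):                   # 5. iterate: one epoch = one pass over the data
        dw = db = loss = 0.0
        for x, y in data:
            p = sigmoid(w * x + b)            # 2. forward propagation: predicted P(cat)
            p = min(max(p, 1e-12), 1 - 1e-12)  # keep p away from 0 and 1 to avoid log(0)
            loss += -(y * math.log(p) + (1 - y) * math.log(1 - p))  # 3. cross-entropy loss
            dw += (p - y) * x                 # 4. backpropagation (chain rule by hand):
            db += (p - y)                     #    gradients of the loss w.r.t. w and b
        n = len(data)
        w -= lr * dw / n                      #    gradient-descent update
        b -= lr * db / n
        history.append(loss / n)              # average loss for this epoch
    return w, b, history


# Hypothetical data: label 1 ("cat") when the feature is positive.
data = [(-2.0, 0), (-1.5, 0), (-1.0, 0), (-0.5, 0), (0.5, 1), (1.0, 1), (1.5, 1), (2.0, 1)]
w, b, history = train(data)
print(f"loss: {history[0]:.3f} -> {history[-1]:.3f}")
print("P(cat | x=1.5) =", round(sigmoid(w * 1.5 + b), 3))
```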

6.5 Model Validation and Tuning

- Validation Set Evaluation: To avoid the model “memorizing” the training data (a problem known as overfitting), we use a separate set of data (the validation set) to check if the model is performing well.

- Hyperparameter Tuning: Sometimes we need to tweak hyperparameters (e.g., learning rate, number of layers) to get better performance. This is often done with search strategies such as grid search, where each candidate setting is evaluated on the validation set or with cross-validation.

- Regularization: Regularization helps prevent overfitting by making the model simpler and less likely to memorize specific data points. Techniques like L2 regularization or dropout can be used to achieve this.
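Grid search and L2 regularization can be sketched together: each combination of learning rate and regularization strength is trained once and scored on a separate validation set. The synthetic data, the grid values, and the function names below are assumptions for illustration, and this sketch uses a single validation split rather than cross-validation.

```python
import itertools
import random
from typing import Dict, List, Tuple

Data = List[Tuple[float, float]]


def make_data(n: int, seed: int) -> Data:
    """Synthetic data from y = 2x + 1 plus Gaussian noise (assumption for illustration)."""
    rng = random.Random(seed)
    xs = [rng.uniform(-1, 1) for _ in range(n)]
    return [(x, 2 * x + 1 + rng.gauss(0, 0.3)) for x in xs]


def fit(train: Data, lr: float, l2: float, epochs: int = 100) -> Tuple[float, float]:
    """Gradient descent on MSE + l2 * w**2 (L2 penalty on the weight; bias not penalized)."""
    w = b = 0.0
    n = len(train)
    for _ in range(epochs):
        dw = sum(2 * (w * x + b - y) * x for x, y in train) / n + 2 * l2 * w
        db = sum(2 * (w * x + b - y) for x, y in train) / n
        w, b = w - lr * dw, b - lr * db
    return w, b


def mse(data: Data, w: float, b: float) -> float:
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def grid_search(train: Data, val: Data, grid: Dict[str, List[float]]):
    """Train once per (lr, l2) combination and keep the lowest validation MSE."""
    results = {}
    for lr, l2 in itertools.product(grid["lr"], grid["l2"]):
        w, b = fit(train, lr, l2)
        results[(lr, l2)] = mse(val, w, b)
    best = min(results, key=results.get)
    return best, results


train_set, val_set = make_data(80, seed=0), make_data(20, seed=1)
best, results = grid_search(train_set, val_set, {"lr": [0.001, 0.01, 0.1], "l2": [0.0, 0.01, 0.1]})
print("best (lr, l2):", best, "validation MSE:", round(results[best], 4))
```

Grid search is simple but its cost grows multiplicatively with every hyperparameter added, which is why random or adaptive searches are also common.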

6.6 Model Testing and Evaluation

- Testing Set Evaluation: After training, we use a fresh set of data (test set) that the model hasn’t seen before. This tests the model’s ability to generalize to new data.

- Evaluation Metrics: The specific metric used to evaluate the model depends on the task. For classification tasks, we might use accuracy, precision, recall, or F1 score. For regression tasks, we might use error metrics like Mean Squared Error (MSE).
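The metrics named above follow directly from their definitions. The sketch below writes them out in plain Python for a binary “cat / not cat” task and for regression; the labels are hypothetical, and libraries such as scikit-learn provide tested implementations for real projects.

```python
from typing import List, Tuple


def confusion_counts(y_true: List[int], y_pred: List[int]) -> Tuple[int, int, int, int]:
    """Return (TP, FP, FN, TN) for binary labels where 1 = positive (e.g. "cat")."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    return tp, fp, fn, tn


def classification_metrics(y_true: List[int], y_pred: List[int]) -> dict:
    tp, fp, fn, tn = confusion_counts(y_true, y_pred)
    accuracy = (tp + tn) / len(y_true)
    precision = tp / (tp + fp) if tp + fp else 0.0  # of predicted cats, how many are cats
    recall = tp / (tp + fn) if tp + fn else 0.0     # of real cats, how many were found
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


def mean_squared_error(y_true: List[float], y_pred: List[float]) -> float:
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 0, 1, 0, 1, 0, 1, 0]
print(classification_metrics(y_true, y_pred))      # every metric is 0.75 here
print(mean_squared_error([3.0, 5.0], [2.5, 5.5]))  # 0.25
```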

6.7 Deployment and Production

- Model Optimization: To make the model run faster and more efficiently in real-world applications, we might optimize it. This can involve reducing the model size, using hardware acceleration, or simplifying computations.

- Deploying the Model: The trained model is then deployed into production. This could be in a cloud service, embedded in a mobile app, or even on a physical device.

- Monitoring and Maintenance: Once the model is live, we need to monitor its performance to ensure it continues to work well. If its performance drops over time (due to new data patterns or changes in the environment), we may need to update or retrain the model.
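One common way to reduce model size is quantization: storing weights as 8-bit integers instead of 32-bit floats, which cuts their memory by about a factor of four. The sketch below shows symmetric, per-tensor int8 quantization in plain Python. The function names and weights are hypothetical, and it is a simplified illustration rather than how any particular deployment toolkit implements quantization.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]
    using a single scale factor derived from the largest absolute weight."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Approximate the original floats from the stored integers."""
    return [v * scale for v in q]


weights = [0.42, -1.27, 0.003, 0.9, -0.5]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
print(q)  # each value fits in 1 byte instead of 4 for float32
print(max(abs(a - b) for a, b in zip(weights, restored)))  # rounding error, at most scale / 2
```

Production toolkits typically refine this with per-channel scales and calibration data to limit the accuracy loss.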

6.8 Model Updates and Iteration

- Continuous Learning: As new data comes in, we can continue training the model to help it adapt to changing patterns and stay effective.

- Re-training: If the model’s performance starts to degrade or the task changes, we may need to re-train it or fine-tune it on newer data.

In summary, AI training is like teaching a computer by giving it examples and guiding it through adjustments until it becomes capable of making accurate predictions. The process involves preparing data, choosing the right model, training it, and continuously improving it to perform well in real-world scenarios.

7. Conclusion

AI training relies on high-performance hardware, including GPUs, CPUs, NVMe SSDs, and InfiniBand. NVLink is used within servers to interconnect GPUs and improve computational efficiency, while InfiniBand connects servers in a cluster, enhancing large-scale training capabilities. Distributed computing strategies ensure efficient resource utilization across GPUs/TPUs.

🚀 Combining NVLink and InfiniBand helps build the most powerful AI training architectures!