As deep learning continues to revolutionize fields such as AI research, computer vision, and natural language processing, choosing the right GPU is critical for optimizing training times and improving model accuracy. NVIDIA, as a leader in the AI hardware space, offers a wide range of GPUs that cater to different needs—from entry-level deep learning tasks to large-scale, enterprise-level AI workloads. In this article, we’ll explore the top NVIDIA GPUs for deep learning, including models like the RTX 4090, H100, A100, L40, and more.

Is the NVIDIA RTX 4090 Good for Deep Learning?

The NVIDIA RTX 4090 is a powerhouse for deep learning and AI applications. Featuring the Ada Lovelace architecture, the RTX 4090 comes with 24GB of GDDR6X VRAM and 16,384 CUDA cores, making it a top choice for those working with complex models and large datasets. The GPU’s Tensor cores provide significant acceleration for matrix multiplications, which are crucial for deep learning model training.
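Tensor Cores speed up the matrix multiplications at the core of training, so one quick sanity check is to count the floating-point operations in a matrix multiply and divide by a GPU's peak throughput. The sketch below assumes the standard count of 2·m·k·n FLOPs for an (m×k)·(k×n) product, that is, one multiply and one add per term. The `peak_tflops` and `utilization` values are hypothetical inputs, not published RTX 4090 figures, and real kernels sustain only a fraction of peak.

```python
def matmul_flops(m: int, k: int, n: int) -> int:
    """FLOPs for multiplying an (m x k) matrix by a (k x n) matrix.

    Each of the m * n output elements needs k multiplies and k adds,
    so the total is 2 * m * k * n.
    """
    return 2 * m * k * n


def ideal_seconds(flops: int, peak_tflops: float, utilization: float = 1.0) -> float:
    """Lower-bound runtime when running at `utilization` of peak throughput.

    peak_tflops is a hypothetical input; utilization < 1.0 models the fact
    that real kernels rarely sustain the advertised peak.
    """
    if not 0.0 < utilization <= 1.0:
        raise ValueError("utilization must be in (0, 1]")
    return flops / (peak_tflops * 1e12 * utilization)


if __name__ == "__main__":
    flops = matmul_flops(4096, 4096, 4096)
    # Hypothetical card: 100 TFLOPS peak, 50% achieved utilization.
    print(f"{flops:.3e} FLOPs, ~{ideal_seconds(flops, 100.0, 0.5) * 1e3:.2f} ms")
```

Doubling any one dimension doubles the work, which is why larger models and batch sizes benefit so directly from Tensor Core throughput.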

For those involved in high-end AI research, the RTX 4090 handles workloads such as image classification, natural language processing, and generative adversarial networks (GANs) well, although its 24GB of memory limits how large a model it can train on a single card. As a consumer graphics card, it also supports ray tracing and AI-enhanced graphics, which makes it a versatile tool for AI-based simulations alongside deep learning.

In deep learning benchmarks, the RTX 4090 ranks among the fastest consumer GPUs for both training and inference, and it is well supported by major frameworks such as TensorFlow and PyTorch.

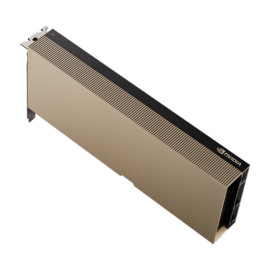

NVIDIA L40 & L40S for Deep Learning

The NVIDIA L40 and L40S are part of NVIDIA's L-series of data center GPUs, which are designed for high-performance AI workloads, graphics, and enterprise applications. Both include fourth-generation Tensor Cores, which are optimized for the matrix operations at the heart of deep learning.

The L40 is built on the Ada Lovelace architecture and comes with 48GB of GDDR6 memory, which makes it well suited to memory-hungry tasks such as reinforcement learning, training large neural networks, and AI research in general. The L40S uses the same GPU and memory configuration but runs at a higher power limit and delivers substantially higher Tensor Core throughput, which makes it the stronger of the two for AI training and inference in the data center.
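A memory capacity such as the L40's 48GB matters because training needs far more memory than the model weights alone. A commonly cited rule of thumb for mixed-precision training with the Adam optimizer is about 16 bytes per parameter: 16-bit weights and gradients, plus 32-bit master weights and two 32-bit optimizer moments. The sketch below applies that rule, assuming 1 GB = 10^9 bytes and a hypothetical 80% headroom factor. It ignores activations, which grow with batch size and sequence length, so the estimate is a lower bound rather than a guarantee.

```python
# Bytes per parameter for mixed-precision training with Adam
# (rule of thumb; activations are NOT included):
#   2 (16-bit weights) + 2 (16-bit gradients)
# + 4 (FP32 master weights) + 4 + 4 (Adam first and second moments)
ADAM_MIXED_PRECISION_BYTES = 2 + 2 + 4 + 4 + 4  # = 16


def training_state_gb(num_params: float,
                      bytes_per_param: int = ADAM_MIXED_PRECISION_BYTES) -> float:
    """Approximate GB (1 GB = 1e9 bytes) for weights, gradients and optimizer state."""
    return num_params * bytes_per_param / 1e9


def fits(num_params: float, vram_gb: float, headroom: float = 0.8) -> bool:
    """True if the training state fits within `headroom` of the card's memory.

    The 0.8 default is an assumption that reserves ~20% for activations,
    the CUDA context and allocator fragmentation.
    """
    return training_state_gb(num_params) <= vram_gb * headroom


if __name__ == "__main__":
    for billions in (1, 2, 3, 7):
        n = billions * 1e9
        print(f"{billions}B params: ~{training_state_gb(n):.0f} GB of state, "
              f"fits on a 48GB card: {fits(n, 48)}")
```

Under these assumptions a 48GB card comfortably holds the training state of a model with a couple of billion parameters, while larger models need sharding across GPUs or memory-saving techniques.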

With 18,176 CUDA cores each, both GPUs offer a large amount of parallel processing power, which is essential for scaling up AI models and training them faster and more efficiently.

NVIDIA L20 and RTX 6000 Ada: 48GB Ada Lovelace GPUs

The NVIDIA L20 and RTX 6000 Ada Generation are both built on the Ada Lovelace architecture and carry 48GB of GDDR6 memory, twice the capacity of the RTX 4090. That extra memory lets them hold larger models and batch sizes than a consumer card.

The L20 is a data center card, while the RTX 6000 Ada is a professional workstation GPU with 18,176 CUDA cores, the same count as the L40. Neither is an entry-level product; the RTX 6000 Ada in particular sits at the top of NVIDIA's Ada Lovelace workstation lineup.

Both are sensible choices when GPU memory, rather than peak compute, is the main constraint on a project.

Is the NVIDIA H100 Good for Deep Learning?

The NVIDIA H100 is one of the most powerful GPUs on the market, designed specifically for large-scale deep learning and high-performance computing (HPC) applications. Built on the Hopper architecture, the H100 offers 80GB of high-bandwidth memory (HBM3 on the SXM version, HBM2e on the PCIe card), which is crucial for handling large models and datasets.

For deep learning practitioners, the H100 is among the fastest GPUs available for training and running complex models. It delivers strong performance on tasks such as large-scale natural language processing (NLP), multimodal AI, and scientific simulations. The H100 also introduces the Transformer Engine, which speeds up transformer-based architectures, commonly used in NLP and generative models, by dynamically mixing FP8 and 16-bit precision.
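FP8 has a very narrow numeric range, so FP8 training schemes rescale each tensor before casting it. The sketch below illustrates one such scheme, per-tensor scaling from a short history of absolute maxima (often called delayed scaling), in plain Python. `DelayedScaler` is a hypothetical helper, not the Transformer Engine API; the formula scale = (448 / amax) / 2^margin is a simplified reading of the idea, where 448 is the largest finite value in the FP8 E4M3 format. The actual rounding to 8-bit values is omitted.

```python
from collections import deque
from typing import Iterable, List

# Largest finite magnitude in the FP8 E4M3 format.
E4M3_MAX = 448.0


class DelayedScaler:
    """Hypothetical helper: per-tensor FP8 scaling from a history of amax values.

    Simplified sketch only: it computes and applies the scale factor and
    clamps to the E4M3 range, but does not round values to 8 bits.
    """

    def __init__(self, history_len: int = 16, margin: int = 0) -> None:
        self.amax_history = deque(maxlen=history_len)  # recent absolute maxima
        self.margin = margin  # extra power-of-two headroom against overflow

    def record(self, values: Iterable[float]) -> None:
        """Store the absolute maximum of a tensor seen in an earlier step."""
        self.amax_history.append(max((abs(v) for v in values), default=0.0))

    def scale(self) -> float:
        """Scale that maps the largest recent magnitude onto E4M3_MAX / 2**margin."""
        amax = max(self.amax_history, default=0.0)
        if amax == 0.0:
            return 1.0
        return (E4M3_MAX / amax) / (2 ** self.margin)

    def to_fp8_range(self, values: Iterable[float]) -> List[float]:
        """Scale values, then clamp them to the representable E4M3 range."""
        s = self.scale()
        return [max(-E4M3_MAX, min(E4M3_MAX, v * s)) for v in values]


if __name__ == "__main__":
    scaler = DelayedScaler(history_len=4)
    scaler.record([0.5, -2.0, 1.0])  # amax from a previous step is 2.0
    print(scaler.scale())            # 448 / 2.0
    # 3.0 exceeds the recorded amax, so its scaled value is clamped.
    print(scaler.to_fp8_range([1.0, -2.0, 3.0]))
```

Because the scale comes from earlier steps, a sudden spike in values can overflow and get clamped; the `margin` parameter trades a little precision for extra headroom against that.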

In terms of pricing, the H100 sits at the high end of the spectrum, but its performance makes it a natural fit for research labs, AI startups, and data centers that need top-tier deep learning throughput.

NVIDIA A100 and RTX A5000: Data Center and Workstation GPUs

The NVIDIA A100 has long been a top choice for deep learning practitioners, particularly in data center environments. Built on the Ampere architecture, the A100 comes with 40GB of HBM2 or 80GB of HBM2e memory, making it highly capable of handling large datasets and training highly complex models.

The A100 is known for its Tensor cores, which accelerate machine learning tasks such as matrix multiplications and convolutions. It’s especially suitable for high-performance computing (HPC), data analytics, and AI model training at scale.

On the other hand, the NVIDIA RTX A5000 is a more affordable workstation GPU that is still highly capable for deep learning. It features 24GB of GDDR6 memory and performs well on small and medium-sized models as well as real-time inference tasks. The RTX A5000 is a good choice for professionals who need to balance performance and cost.

NVIDIA RTX A4000 and H200 for AI Research

The NVIDIA RTX A4000 is another good option for those who need a mid-range GPU for deep learning. With 16GB of GDDR6 memory, it can handle a wide range of machine learning tasks, from training smaller models to real-time inference. While it lacks the raw power of the A100 or H100, the RTX A4000 is a cost-effective choice for professionals who want a capable GPU without breaking the bank.

The H200 is an updated Hopper-architecture GPU that pairs H100-class compute with 141GB of HBM3e memory and roughly 4.8 TB/s of memory bandwidth. That extra capacity and bandwidth mainly benefit memory-intensive workloads, such as serving and fine-tuning large language models, in research environments and data centers.
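For memory-intensive workloads such as generating text with a large language model one token at a time, memory bandwidth often matters more than compute. A rough bound assumes that each generated token requires streaming every weight from GPU memory once, so tokens per second cannot exceed bandwidth divided by the size of the weights. The sketch below encodes that estimate; the 70B-parameter model and the bandwidth values are illustrative assumptions, not vendor specifications, and the bound ignores KV-cache traffic, batching and kernel overheads.

```python
def weights_gb(num_params: float, bytes_per_param: float) -> float:
    """Memory needed just for the model weights, in GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


def max_decode_tokens_per_s(num_params: float, bytes_per_param: float,
                            bandwidth_tb_s: float) -> float:
    """Rough upper bound on single-stream (batch size 1) decoding speed.

    Assumes generating each token reads every weight from GPU memory once,
    and ignores KV-cache reads, kernel overheads and compute limits.
    """
    bytes_per_token = num_params * bytes_per_param
    return bandwidth_tb_s * 1e12 / bytes_per_token


if __name__ == "__main__":
    # Hypothetical 70B-parameter model stored in 16-bit precision (2 bytes/param).
    params = 70e9
    for bw in (2.0, 4.0):  # illustrative bandwidths in TB/s, not vendor specs
        print(f"{weights_gb(params, 2):.0f} GB of weights, "
              f"<= {max_decode_tokens_per_s(params, 2, bw):.1f} tokens/s at {bw} TB/s")
```

Under this model, doubling memory bandwidth roughly doubles the single-stream ceiling, and the weights must also fit in GPU memory in the first place, which is where a larger capacity helps.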

Deep Learning GPU Benchmarks

When selecting a GPU for deep learning, benchmarking is essential to evaluate performance. Some important metrics for GPU performance in deep learning include:

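- Training Speed: How quickly the GPU trains a model, usually reported as samples, images, or tokens processed per second.

- Inference Speed: How quickly a trained model produces predictions, measured as latency per request and as overall throughput.

- Memory Bandwidth: The rate at which the GPU can move data to and from its memory, which often limits performance for large models.

- FP32 and Tensor Core Throughput: Floating-point operations per second. Because most modern training uses mixed precision (FP16, BF16, TF32, or FP8) on Tensor Cores, those figures often matter more than FP32 alone.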
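Training and inference speed can be measured with a simple timing harness. The sketch below warms up, times repeated calls to a step function, and reports median latency and items per second. `step` is a hypothetical stand-in for one training or inference iteration on one batch. On a real GPU the step must wait for device work to finish (in PyTorch, for example, by calling `torch.cuda.synchronize()`), because kernel launches are asynchronous and the timings would otherwise measure only launch overhead.

```python
import statistics
import time
from typing import Callable, Dict


def measure_throughput(step: Callable[[], None], items_per_step: int,
                       warmup: int = 3, iters: int = 20) -> Dict[str, float]:
    """Time repeated calls to `step` and report median latency and throughput.

    `step` is a hypothetical stand-in for one training or inference
    iteration on a batch of `items_per_step` samples; it must block until
    the GPU has finished its work.
    """
    if iters < 1:
        raise ValueError("iters must be at least 1")
    for _ in range(warmup):  # exclude one-off costs such as compilation and caching
        step()
    durations = []
    for _ in range(iters):
        start = time.perf_counter()
        step()
        durations.append(time.perf_counter() - start)
    median_s = statistics.median(durations)  # median resists outliers from background load
    if median_s == 0.0:
        raise RuntimeError("step finished too quickly to time reliably")
    return {"median_s": median_s, "items_per_s": items_per_step / median_s}


if __name__ == "__main__":
    # Placeholder step that sleeps 5 ms per "batch" of 64 samples.
    result = measure_throughput(lambda: time.sleep(0.005), items_per_step=64, iters=5)
    print(f"median {result['median_s'] * 1e3:.2f} ms, "
          f"{result['items_per_s']:.0f} samples/s")
```

For inference latency, run the same harness with a batch size of 1 and read `median_s` as the per-request latency; for throughput, use the largest batch that fits in memory.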

In published deep learning benchmarks, the H100 and A100 are typically among the leaders for large-scale training throughput, and the RTX 4090 is among the fastest single consumer cards. The L40 and RTX A5000 also deliver strong performance for their price, making them solid choices for a range of deep learning tasks.

Conclusion: Which NVIDIA GPU Is Best for Deep Learning?

Choosing the best NVIDIA GPU for deep learning depends largely on your project’s scale and your budget. Here’s a quick breakdown of the top models:

- Best for Enterprise-Level AI: H100 and A100 – these are designed for massive deep learning workloads and high-performance computing, ideal for AI research labs and large-scale data centers.

- Best for High-End Consumer AI: RTX 4090 – offers fantastic performance for training and running AI models, making it suitable for professionals working on cutting-edge AI research and development.

- Best for Mid-Range AI Workloads: A5000, A4000, and L40 – these GPUs provide excellent value for deep learning enthusiasts and professionals who need a balance between performance and price.

- Best for Large-Memory Workstations and Servers: RTX 6000 Ada and L20 – both pair the Ada Lovelace architecture with 48GB of memory, which suits models that outgrow a 24GB consumer card.
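As a first pass at the question of scale, it can help to turn the memory capacities quoted in this article into a filter: estimate how much memory a single card must hold, then list the GPUs that meet it. The sketch below does only that. The catalog is limited to capacities stated in this article, and it deliberately ignores price, compute throughput, and multi-GPU setups that split a model across several smaller cards.

```python
from typing import Dict, List

# Memory capacities (GB) as quoted in this article; price and compute
# throughput are deliberately left out of this simple filter.
GPU_MEMORY_GB: Dict[str, int] = {
    "RTX A4000": 16,
    "RTX 4090": 24,
    "RTX A5000": 24,
    "A100 40GB": 40,
    "L40": 48,
    "A100 80GB": 80,
    "H100": 80,
}


def candidates(required_gb: float,
               catalog: Dict[str, int] = GPU_MEMORY_GB) -> List[str]:
    """Return GPUs with at least `required_gb` of memory, smallest card first.

    Ties on capacity are broken alphabetically so the output is deterministic.
    """
    eligible = [name for name, gb in catalog.items() if gb >= required_gb]
    return sorted(eligible, key=lambda name: (catalog[name], name))


if __name__ == "__main__":
    # e.g. a workload estimated to need ~30 GB on one card
    print(candidates(30))
```

Listing the smallest sufficient card first reflects the budget side of the trade-off: the first entry is usually the cheapest GPU that can hold the workload on its own.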

In the end, NVIDIA remains the leader in AI and deep learning hardware, with GPUs offering varying levels of performance to meet the needs of different users. Whether you’re just getting started or working on high-end research, there’s an NVIDIA GPU that fits your deep learning needs.