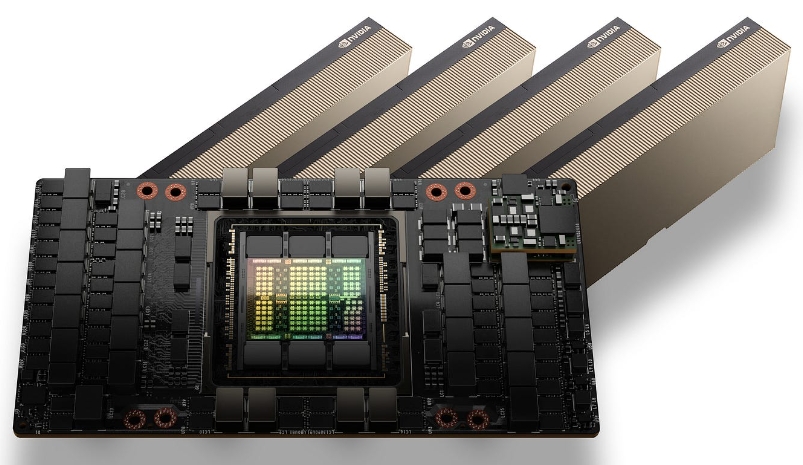

- NVIDIA H100 is a high-performance GPU designed for data center and cloud applications, optimized for AI workloads

- It is based on the NVIDIA Hopper architecture; the PCIe version has 456 fourth-generation Tensor Cores across 114 SMs and can train large models such as GPT-3 (175B) up to four times faster than its predecessor

- With 2 TB/s of memory bandwidth and a PCIe Gen5 interface, it can efficiently handle large-scale data processing tasks

- Advanced features include Multi-Instance GPU (MIG) technology, enhanced NVLink, and enterprise-class reliability tools

Securely accelerate workloads from enterprise to exascale.

Revolutionary AI Training

The H100 is equipped with fourth-generation Tensor Cores and a Transformer Engine utilizing FP8 precision, allowing it to train the GPT-3 (175B) model up to four times faster than its predecessor. This technology is paired with fourth-generation NVLink, providing 900 GB/s of GPU-to-GPU connectivity; the NDR Quantum-2 InfiniBand network for accelerated inter-GPU communication across nodes; PCIe Gen5; and NVIDIA Magnum IO™ software. Together, these components allow scaling from small enterprise configurations to large unified GPU clusters.
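To put the interconnect figures in perspective, the short Python sketch below estimates the idealized time to move a fixed payload over a link of a given bandwidth. The 900 GB/s NVLink figure comes from the text above. The 128 GB/s value for PCIe Gen5 x16 and the 350 GB payload (175B parameters at 2 bytes each) are illustrative assumptions, and the model ignores latency, protocol overhead, and topology, so treat the results as rough lower bounds rather than measurements.

```python
def transfer_seconds(payload_gb: float, bandwidth_gb_per_s: float) -> float:
    """Idealized transfer time: payload size divided by link bandwidth."""
    if bandwidth_gb_per_s <= 0:
        raise ValueError("bandwidth must be positive")
    return payload_gb / bandwidth_gb_per_s


# Assumed payload: 175B parameters stored at 2 bytes each (FP16), in GB.
PAYLOAD_GB = 175e9 * 2 / 1e9

LINKS_GB_PER_S = {
    "NVLink (4th gen)": 900.0,  # figure quoted in the text
    "PCIe Gen5 x16": 128.0,     # assumption: nominal bidirectional bandwidth
}

if __name__ == "__main__":
    for name, bandwidth in LINKS_GB_PER_S.items():
        seconds = transfer_seconds(PAYLOAD_GB, bandwidth)
        print(f"{name}: {seconds:.2f} s to move {PAYLOAD_GB:.0f} GB")
```

Under these assumptions the ratio between the two results is simply the ratio of the bandwidths, which is why the text stresses NVLink for GPU-to-GPU traffic.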

Deploying the H100 GPU at data center scale delivers the performance needed for the next generation of exascale high-performance computing (HPC) and trillion-parameter AI, making these capabilities available to researchers everywhere.


Real-Time Deep Learning Inference

AI uses a wide range of neural networks to tackle various business challenges. An effective AI inference accelerator must provide not only superior performance but also the flexibility to accelerate different types of networks.

The H100 solidifies NVIDIA’s leadership in inference acceleration with improvements that increase inference speed by up to 30x while ensuring minimal latency. The fourth-generation Tensor Cores accelerate all precisions, including FP64, TF32, FP32, FP16, INT8, and the new FP8, optimizing memory usage and boosting performance without compromising the accuracy of large language models (LLMs).
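The memory effect of lower precision can be illustrated with simple arithmetic. The Python sketch below computes the storage needed for model weights alone at each precision listed above, and the minimum number of 80 GB cards required to hold them. It is a rough estimate under stated assumptions: the 175B parameter count is borrowed from the GPT-3 example in this document, TF32 is treated as occupying 32-bit storage, and activations, KV cache, and framework overhead are ignored. The function names are hypothetical helpers, not part of any NVIDIA API.

```python
import math

# Storage size per value, in bytes, for each precision named in the text.
BYTES_PER_VALUE = {
    "FP64": 8,
    "FP32": 4,
    "TF32": 4,  # assumption: TF32 is a compute mode; values sit in 32-bit storage
    "FP16": 2,
    "INT8": 1,
    "FP8": 1,
}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Memory for weights only, in GB (10^9 bytes)."""
    return num_params * BYTES_PER_VALUE[precision] / 1e9


def min_gpus_for_weights(num_params: float, precision: str,
                         gpu_memory_gb: float = 80.0) -> int:
    """Smallest number of GPUs whose combined memory holds the weights."""
    return math.ceil(weight_memory_gb(num_params, precision) / gpu_memory_gb)


if __name__ == "__main__":
    params = 175e9  # assumed model size, taken from the GPT-3 (175B) example
    for precision in BYTES_PER_VALUE:
        gb = weight_memory_gb(params, precision)
        gpus = min_gpus_for_weights(params, precision)
        print(f"{precision}: {gb:.0f} GB of weights, at least {gpus} x 80 GB GPUs")
```

Halving the bytes per value halves the weight footprint, which is the sense in which FP8 reduces memory usage relative to FP16.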

The NVIDIA H100 80GB PCIe Tensor Core GPU is a data center accelerator optimized for AI, machine learning, and data analytics. With 80GB of high-bandwidth memory, it delivers high performance and efficiency for complex computational tasks. Built on the Hopper architecture, the H100 handles large models and datasets well, making it a strong fit for researchers and developers pushing the boundaries of artificial intelligence. Its capabilities in parallel processing and real-time inference help users accelerate their workloads across a variety of applications.