This guide is intended for individual users. For enterprise-level on-premise deployment, see the separate post: DeepSeek Enterprise On-Premise Deployment & Customization Guide

Check Your Computer’s Configuration to See Which DeepSeek Model Is Suitable for Deployment

DeepSeek offers models with different parameter counts, and the more parameters a model has, the more capable the hardware it needs. The two figures that matter most are system memory (RAM) and video memory (VRAM). Use the following tool to check which DeepSeek models your device can run: https://tools.thinkinai.xyz/
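As a rough cross-check on the tool above, the memory needed just to hold a model's weights is about the parameter count multiplied by the bytes per parameter. The sketch below is a rule of thumb only: the function name `estimate_weights_gb` is made up for illustration, the 4-bit default assumes a quantized build, and real usage will be higher once the context window and runtime overhead are included.

```shell
#!/bin/sh
# Rule-of-thumb estimate of the memory needed for the model weights alone.
# Usage: estimate_weights_gb <parameters in billions> [bits per parameter]
# Assumption: 4 bits per parameter when the second argument is omitted.
estimate_weights_gb() {
    params_b="$1"
    bits="${2:-4}"
    # billions of parameters * bits / 8 = gigabytes (decimal) of weights
    awk -v p="$params_b" -v b="$bits" 'BEGIN { printf "%.1f\n", p * b / 8 }'
}

estimate_weights_gb 7      # 7B model at 4 bits: prints 3.5
estimate_weights_gb 7 16   # same model at 16 bits: prints 14.0
```

Compare the result with what your machine actually has. On Linux, `free -h` reports system memory, and `nvidia-smi` reports video memory on NVIDIA GPUs.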

Use Ollama to Deploy the Specified Version of DeepSeek

Once you’ve determined which model your device can support, we can start deploying DeepSeek with Ollama.

Installing Ollama

Ollama is a tool for running large models locally, and it supports downloading and running models on macOS, Linux, and Windows.

Here’s how to install Ollama:

- For macOS and Windows users, simply visit the Ollama website at ollama.com/download and download the appropriate installation package.

- For Linux installation:

curl -fsSL https://ollama.com/install.sh | sudo bash

sudo usermod -aG ollama $USER # Add user permissions

sudo systemctl start ollama # Start the service

Once Ollama is installed, you can check the version by running:

ollama -v

If the version information appears, the installation is successful.

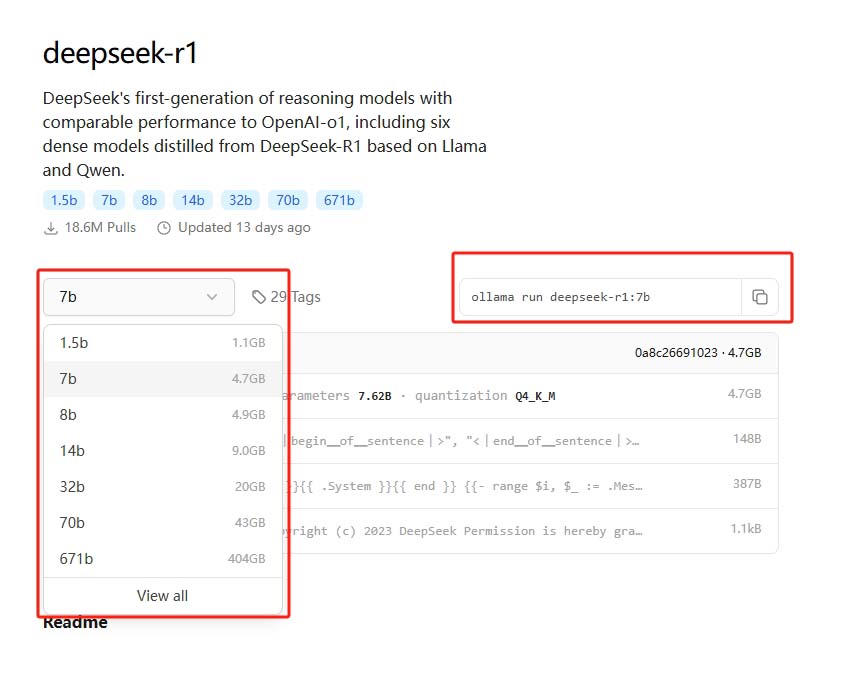
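Beyond checking the version, it can help to confirm that the background service is actually answering requests. This is a minimal sketch, assuming the server listens on the default address http://localhost:11434; the helper name `ollama_status` and the `BASE_URL` variable are hypothetical names chosen for this example.

```shell
#!/bin/sh
# Assumption: Ollama serves on its default local address.
BASE_URL="http://localhost:11434"

# Report whether the ollama CLI is on PATH and whether the local server answers.
ollama_status() {
    if ! command -v ollama >/dev/null 2>&1; then
        echo "ollama: not installed"
        return 1
    fi
    # -f makes curl fail on HTTP errors; --max-time keeps it from hanging
    if curl -fsS --max-time 5 "$BASE_URL/api/version" >/dev/null 2>&1; then
        echo "ollama: installed, server reachable at $BASE_URL"
    else
        echo "ollama: installed, but server not reachable at $BASE_URL"
        return 2
    fi
}
```

Run `ollama_status` after installation. If it reports that the server is not reachable on Linux, starting the service with the systemctl command above is the first thing to try.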

Using Ollama to Deploy DeepSeek

Visit the DeepSeek on Ollama page on the Ollama website, select the model size (parameter count) you need, copy the corresponding command, and run it in the terminal to complete the deployment.

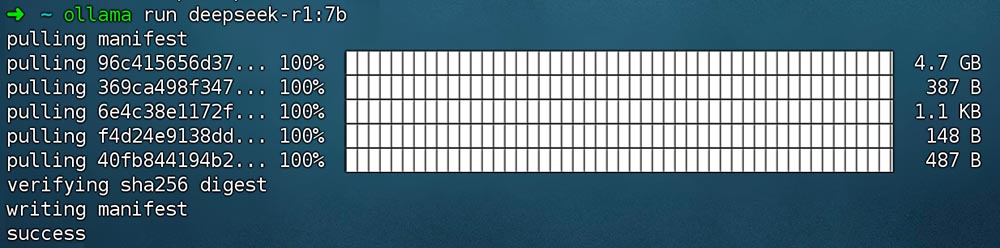

For example, run the command ollama run deepseek-r1:7b to deploy the 7B-parameter DeepSeek R1 model.

Once the deployment is complete, you can start chatting directly with DeepSeek in the terminal.
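The same deployment can also be scripted instead of typed interactively. The sketch below is illustrative: `r1_tag` and `ask_r1` are hypothetical helper names, and the list of sizes reflects the deepseek-r1 tags available at the time of writing, which is an assumption that may change.

```shell
#!/bin/sh
# Build the Ollama model tag for a DeepSeek R1 size, e.g. 7b -> deepseek-r1:7b.
# Assumption: the sizes below match the tags currently published for deepseek-r1.
r1_tag() {
    case "$1" in
        1.5b|7b|8b|14b|32b|70b|671b) echo "deepseek-r1:$1" ;;
        *) echo "unknown size: $1" >&2; return 1 ;;
    esac
}

# Download the model if needed, then ask one question without entering the chat prompt.
ask_r1() {
    tag="$(r1_tag "$1")" || return 1
    ollama pull "$tag" && ollama run "$tag" "$2"
}
```

For instance, `ask_r1 7b "Explain recursion in one sentence."` prints a single answer and returns to the shell. `ollama list` shows which models are already downloaded, and typing /bye ends an interactive chat session.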

Deploying a Graphical Interface Client

After deploying DeepSeek, if you prefer to interact with the model through a graphical interface rather than the command line, consider deploying one of the following GUIs:

- ChatBox (Graphical Interface) supports both web and local clients.

- AnythingLLM (Graphical Interface)

- Open WebUI (Graphical Interface) supports web, similar to ChatGPT.

- Cherry Studio (Graphical Interface)

- Page Assist (Browser Extension) supports “online search”

For example, to use ChatBox, visit the official ChatBox website to download the client. After installation, open the settings in ChatBox, enter the Ollama API URL (http://localhost:11434 or http://127.0.0.1:11434) and the model name (e.g., deepseek-r1:7b), then save the settings. You can then open a new conversation and select the model to start chatting.
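If a client cannot connect, it may be worth testing the API URL directly from the terminal first. The following is a minimal sketch against Ollama's HTTP API, assuming the default address; the function names and the `BASE_URL` variable are hypothetical, and the payload builder does no JSON escaping.

```shell
#!/bin/sh
# Assumption: Ollama serves on its default local address (plain HTTP, not HTTPS).
BASE_URL="http://localhost:11434"

# Print the JSON body for a single-turn, non-streaming chat request.
# Kept simple: the model name and prompt must not contain double quotes or backslashes.
chat_payload() {
    printf '{"model":"%s","messages":[{"role":"user","content":"%s"}],"stream":false}\n' "$1" "$2"
}

# List the locally available models (the names a GUI client can select).
list_models() {
    curl -fsS "$BASE_URL/api/tags"
}

# Send one prompt to a model and print the raw JSON reply.
chat_once() {
    curl -fsS "$BASE_URL/api/chat" \
        -H 'Content-Type: application/json' \
        -d "$(chat_payload "$1" "$2")"
}
```

Running `list_models` should return JSON that includes deepseek-r1:7b once the model is downloaded, and `chat_once deepseek-r1:7b "Hello"` should return a JSON reply. If both work, the same URL and model name should work in the GUI client.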

Conclusion

This guide has walked you through deploying DeepSeek on your local computer, from checking your system’s compatibility to installing Ollama and running a model. Whether you chat with DeepSeek in the terminal or set up a graphical interface for a more user-friendly experience, you now have everything you need to start exploring. Happy experimenting!